- [ ] I have marked all applicable categories:
    + [x] exception-raising fix
    + [ ] algorithm implementation fix
    + [ ] documentation modification
    + [ ] new feature
- [ ] I have reformatted the code using `make format` (**required**)
- [ ] I have checked the code using `make commit-checks` (**required**)
- [ ] If applicable, I have mentioned the relevant/related issue(s)
- [ ] If applicable, I have listed every item in this Pull Request below

I'm developing a new PettingZoo environment. It is a two-player, turn-based board game.

```python
import functools

import gymnasium as gym

# environment setup (excerpt)
obs_space = dict(
    board=gym.spaces.MultiBinary([8, 8]),
    player=gym.spaces.Tuple([gym.spaces.Discrete(8)] * 2),
    other_player=gym.spaces.Tuple([gym.spaces.Discrete(8)] * 2),
)
self._observation_space = gym.spaces.Dict(spaces=obs_space)
self._action_space = gym.spaces.Tuple([gym.spaces.Discrete(8)] * 2)

...

# this cache ensures that the same space object is returned for the same agent;
# it allows action space seeding to work as expected
@functools.lru_cache(maxsize=None)
def observation_space(self, agent):
    # gymnasium spaces are defined and documented here:
    # https://gymnasium.farama.org/api/spaces/
    return self._observation_space

@functools.lru_cache(maxsize=None)
def action_space(self, agent):
    return self._action_space
```

My test is:

```python
from tianshou.data import Collector
from tianshou.env import DummyVectorEnv, PettingZooEnv
from tianshou.policy import MultiAgentPolicyManager, RandomPolicy

# CollectCoinsEnv (my environment) and EnsureValidAction (the wrapper below)
# come from my own code.


def test_with_tianshou():
    action = None
    # env = gym.make('qwertyenv/CollectCoins-v0', pieces=['rock', 'rock'])
    env = CollectCoinsEnv(pieces=['rock', 'rock'], with_mask=True)

    def another_action_taken(action_taken):
        nonlocal action
        action = action_taken

    # Wrap the original environment to make sure a valid action will be taken.
    env = EnsureValidAction(
        env,
        env.check_action_valid,
        env.provide_alternative_valid_action,
        another_action_taken,
    )
    # distinct names keep the lambda from depending on a rebound `env`
    pz_env = PettingZooEnv(env)
    policies = MultiAgentPolicyManager([RandomPolicy(), RandomPolicy()], pz_env)
    venv = DummyVectorEnv([lambda: pz_env])
    collector = Collector(policies, venv)
    result = collector.collect(n_step=200, render=0.1)
```

I also have a wrapper that may be redundant given Tianshou's support for action masks, but it is still part of the code:

```python
from typing import Callable, TypeVar

from pettingzoo import AECEnv
from pettingzoo.utils.wrappers import BaseWrapper

Action = TypeVar("Action")


class ActionWrapper(BaseWrapper):
    def __init__(self, env: AECEnv):
        super().__init__(env)

    def step(self, action):
        # transform the action before passing it on to the wrapped env
        action = self.action(action)
        self.env.step(action)

    def action(self, action):
        # subclasses must implement the action transformation
        raise NotImplementedError

    def render(self, *args, **kwargs):
        return self.env.render(*args, **kwargs)


class EnsureValidAction(ActionWrapper):
    """
    An environment wrapper to help with the case that the agent wants to
    take invalid actions.

    For example, consider a Chess game where you let the action_space be any
    piece moving to any square on the board. When a wrong move is taken,
    instead of returning a big negative reward, you just take another action,
    this time a valid one. To make sure the learning algorithm is aware of
    the action taken, a callback should be provided.
    """

    def __init__(
        self,
        env: AECEnv,
        check_action_valid: Callable[[Action], bool],
        provide_alternative_valid_action: Callable[[Action], Action],
        alternative_action_cb: Callable[[Action], None],
    ):
        super().__init__(env)
        self.check_action_valid = check_action_valid
        self.provide_alternative_valid_action = provide_alternative_valid_action
        self.alternative_action_cb = alternative_action_cb

    def action(self, action: Action) -> Action:
        if self.check_action_valid(action):
            return action
        # replace the invalid action and report the substitute via the callback
        alternative_action = self.provide_alternative_valid_action(action)
        self.alternative_action_cb(alternative_action)
        return alternative_action
```
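For illustration, here is a minimal sketch of callbacks that could be passed to `EnsureValidAction` for an 8x8 board where an action is a `(row, col)` pair. The helper `make_board_callbacks`, the `blocked` set of forbidden squares, and the nearest-square (Manhattan distance) fallback are all hypothetical; the real `CollectCoinsEnv.check_action_valid` / `provide_alternative_valid_action` are not shown in this PR.

```python
from typing import Callable, Set, Tuple

Square = Tuple[int, int]


def make_board_callbacks(
    blocked: Set[Square], size: int = 8
) -> Tuple[Callable[[Square], bool], Callable[[Square], Square]]:
    """Hypothetical validity callbacks for an action of the form (row, col)."""

    def check_action_valid(action: Square) -> bool:
        row, col = action
        on_board = 0 <= row < size and 0 <= col < size
        return on_board and (row, col) not in blocked

    def provide_alternative_valid_action(action: Square) -> Square:
        # choose the valid square closest (Manhattan distance) to the requested one;
        # ties are broken by row-major order
        row, col = action
        candidates = [
            (r, c) for r in range(size) for c in range(size) if (r, c) not in blocked
        ]
        return min(candidates, key=lambda sq: abs(sq[0] - row) + abs(sq[1] - col))

    return check_action_valid, provide_alternative_valid_action


check, alternative = make_board_callbacks(blocked={(0, 0)})
print(check((0, 0)), alternative((0, 0)))  # False (0, 1)
```

Keeping validity checking and substitution as two separate callbacks mirrors the wrapper's constructor, so the environment can own the game rules while the wrapper only decides when to apply them.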

To make the above work, I had to patch PettingZoo slightly (I opened a pull request there) and make a small patch here (this PR).

Maybe I'm doing something wrong, but I fail to see it.

With both my fixes (to PettingZoo and to Tianshou), I have two tests: one of the environment by itself, and the one above.

Changes:

- Disclaimer in README
- Replaced all occurrences of Gym with Gymnasium
- Removed code that is now dead since we no longer need to support the old step API
- Updated type hints to only allow the new step API
- Increased the required version of envpool to support Gymnasium
- Increased the required version of PettingZoo to support Gymnasium
- Updated `PettingZooEnv` to only use the new step API, and removed the hack that also supported the old API
- I had to add some `# type: ignore` comments due to new type hinting in Gymnasium. I'm not that familiar with type hinting, but I believe the issue is on the Gymnasium side and we are looking into it.
- Had to update `MyTestEnv` to support the `options` kwarg
- Skip NNI tests because they still use OpenAI Gym
- Also allow `PettingZooEnv` in vector environments
- Updated the doc page about ReplayBuffer to also talk about the terminated and truncated flags.
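As background for the terminated/truncated split, a common convention (sketched here, not copied from Tianshou's code) is that an episode ends on `terminated or truncated`, while bootstrapping is cut off only on `terminated`, because a time-limit truncation does not mean the next state has zero value. `episode_done` and `td_target` are hypothetical helpers.

```python
import numpy as np


def episode_done(terminated: np.ndarray, truncated: np.ndarray) -> np.ndarray:
    # either flag ends the episode (e.g. for resetting the env or splitting episodes)
    return np.logical_or(terminated, truncated)


def td_target(rew, terminated, next_value, gamma: float = 0.99) -> np.ndarray:
    # only true termination stops bootstrapping; a truncated step still bootstraps
    not_term = 1.0 - np.asarray(terminated, dtype=float)
    return np.asarray(rew, dtype=float) + gamma * not_term * np.asarray(next_value, dtype=float)


terminated = np.array([False, True, False])
truncated = np.array([False, False, True])
print(episode_done(terminated, truncated).tolist())  # [False, True, True]
print(td_target([1.0, 1.0, 1.0], terminated, [2.0, 2.0, 2.0], gamma=0.5))  # [2. 1. 2.]
```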

Still need to do:

- Update the Jupyter notebooks in docs
- Check the entire code base for more dead code (from compatibility stuff)
- Check the reset functions of all environments/wrappers in the code base to make sure they use the `options` kwarg
- Someone might want to check test_env_finite.py
- Is it okay to allow `PettingZooEnv` in vector environments? Might need to update the docs?

This allows, for instance, changing the action recorded in the buffer when the environment modifies the action. This is useful in offline learning, for instance, since the true actions are in a dataset and the agent's actions are ignored.
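A minimal sketch of the idea, assuming the environment reports the action it actually executed through its `info` dict. The key name `executed_action` and the `record_transition` helper are hypothetical and not Tianshou's API; a list of dicts stands in for the replay buffer.

```python
from typing import Any, Dict, List


def record_transition(
    buffer: List[Dict[str, Any]],
    obs: Any,
    act: Any,
    rew: float,
    info: Dict[str, Any],
    key: str = "executed_action",  # hypothetical info key
) -> None:
    # prefer the action the env says it executed (e.g. taken from an offline dataset),
    # falling back to the agent's own action
    stored_act = info.get(key, act)
    buffer.append({"obs": obs, "act": stored_act, "rew": rew})


buf: List[Dict[str, Any]] = []
record_transition(buf, obs=0, act=1, rew=0.0, info={})
record_transition(buf, obs=1, act=1, rew=1.0, info={"executed_action": 3})
print([t["act"] for t in buf])  # [1, 3]
```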

- [ ] I have marked all applicable categories:
    + [ ] exception-raising fix
    + [ ] algorithm implementation fix
    + [ ] documentation modification
    + [X] new feature
- [X] I have reformatted the code using `make format` (**required**)
- [X] I have checked the code using `make commit-checks` (**required**)
- [ ] If applicable, I have mentioned the relevant/related issue(s)
- [X] If applicable, I have listed every item in this Pull Request below

## implementation

I implemented HER solely as a replay buffer. This is done by temporarily rewriting the transition storage (`self._meta`) in place during the `sample_indices()` call. The original transitions are cached and restored at the beginning of the next sampling or when other methods are called. This ensures that, for example, n-step return calculation can be done without altering the policy.

There is also a problem with the original index sampling: the sampled indices are not guaranteed to come from different episodes. So I decided to perform the rewriting per episode. This guarantees that sampled transitions from the same episode will have the same rewritten goal. It also makes the rewriting-ratio calculation slightly different from the paper, but the difference should be small if there are many episodes in the buffer.

In the current commit, the HER replay buffer only supports the 'future' strategy and online sampling. This is the best variant of HER in terms of performance and memory efficiency.
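The cache-and-restore mechanism and the per-episode 'future' relabeling described above can be sketched in a simplified, standalone form. Everything here is hypothetical: `ToyHERBuffer` uses a plain dict of NumPy arrays in place of `self._meta`, stores an explicit `ep_last` index per transition, relabels each sampled episode with probability `future_p`, rewrites only the sampled transitions (ignoring n-step lookahead and buffer wrap-around), and uses a sparse 0/-1 reward. The actual `HERReplayBuffer` differs in its details.

```python
import numpy as np


class ToyHERBuffer:
    """Hypothetical, simplified HER buffer illustrating cache-and-restore relabeling."""

    def __init__(self, goal, achieved, ep_id, ep_last, future_p=0.8, seed=0):
        # plain dict of arrays standing in for the buffer's ``self._meta``
        self._meta = {
            "goal": np.array(goal, dtype=float),  # desired goal per transition
            "achieved": np.array(achieved, dtype=float),  # goal achieved after the step
            "ep_id": np.array(ep_id),  # episode id per transition
            "ep_last": np.array(ep_last),  # index of the episode's last transition
            "rew": np.zeros(len(goal)),
        }
        self.future_p = future_p
        self.rng = np.random.default_rng(seed)
        self._cache = None  # (indices, original goals, original rewards)
        self._compute_rew(np.arange(len(goal)))

    def _compute_rew(self, idx):
        # sparse reward: 0 if the achieved goal matches the desired goal, else -1
        hit = np.all(np.isclose(self._meta["achieved"][idx], self._meta["goal"][idx]), axis=-1)
        self._meta["rew"][idx] = np.where(hit, 0.0, -1.0)

    def restore(self):
        # write back the original transitions overwritten by the last sampling
        if self._cache is not None:
            idx, goal, rew = self._cache
            self._meta["goal"][idx] = goal
            self._meta["rew"][idx] = rew
            self._cache = None

    def sample_indices(self, batch_size):
        self.restore()
        idx = self.rng.integers(0, len(self._meta["rew"]), size=batch_size)
        # cache the originals before rewriting them
        self._cache = (idx, self._meta["goal"][idx].copy(), self._meta["rew"][idx].copy())
        for ep in np.unique(self._meta["ep_id"][idx]):
            if self.rng.random() >= self.future_p:
                continue  # keep this episode's original goal
            in_ep = idx[self._meta["ep_id"][idx] == ep]
            # 'future' strategy, one goal per episode: pick a step at or after the
            # latest sampled step, so it lies in the future of every sampled transition
            latest = in_ep.max()
            t = self.rng.integers(latest, self._meta["ep_last"][latest] + 1)
            self._meta["goal"][in_ep] = self._meta["achieved"][t]
            self._compute_rew(in_ep)
        return idx
```

Because the relabeled goals live in the storage itself until the next `sample_indices()` or `restore()`, any code that reads the buffer in between (such as an n-step return computation) sees the relabeled transitions without needing to know about HER.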

I also added a few more conveniences: replay buffers (`HERVectorReplayBuffer`, `HERReplayBufferManager`), a test env (`MyGoalEnv`), a gym wrapper (`TruncatedAsTerminated`), unit tests, and a simple example (examples/offline/fetch_her_ddpg.py).

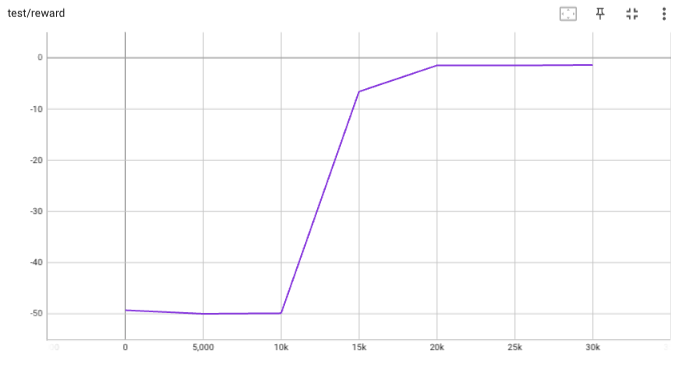

## verification

I have added unit tests for almost everything I have implemented.

The HER replay buffer was also tested using DDPG on the [`FetchReach-v3` env](https://github.com/Farama-Foundation/Gymnasium-Robotics). I used the default DDPG parameters from the MuJoCo example and didn't tune anything further to get this good result! (train script: examples/offline/fetch_her_ddpg.py).

* Changes to support Gym 0.26.0

* Replace map by simpler list comprehension

* Use syntax that is compatible with python 3.7

* Format code

* Fix environment seeding in test environment, fix buffer_profile test

* Remove `self.seed()` from `__init__`

* Fix random number generation

* Fix throughput tests

* Fix tests

* Removed done field from Buffer, fixed throughput test, turned off wandb, fixed formatting, fixed type hints, allow preprocessing_fn with truncated and terminated arguments, updated docstrings

* fix lint

* fix

* fix import

* fix

* fix mypy

* `pytest --ignore='test/3rd_party'`

* Use correct step API in _SetAttrWrapper

* Format

* Fix mypy

* Format

* Fix pydocstyle.

Fixes some deprecation warnings caused by changes in gym version 0.23:

- use `env.np_random.integers` instead of `env.np_random.randint`

- support `seed` and `return_info` arguments for reset (addresses https://github.com/thu-ml/tianshou/issues/605)
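A minimal sketch of a toy environment following these two conventions. `ToyEnv` and its integer state are hypothetical, and it uses NumPy's `Generator` directly instead of inheriting from `gym.Env`.

```python
from typing import Optional

import numpy as np


class ToyEnv:
    def __init__(self) -> None:
        self.np_random = np.random.default_rng()
        self.state = 0

    def reset(self, seed: Optional[int] = None, return_info: bool = False):
        if seed is not None:
            # re-create the generator so that reset(seed=...) is reproducible
            self.np_random = np.random.default_rng(seed)
        # Generator.integers replaces the deprecated RandomState.randint
        self.state = int(self.np_random.integers(0, 10))
        return (self.state, {}) if return_info else self.state


env = ToyEnv()
assert env.reset(seed=3) == env.reset(seed=3)  # same seed, same initial state
```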

(Issue #512) The random start in Collector samples actions from the action space, while policies output actions in a range (typically [-1, 1]) and map them to the action space. The buffer only stores unmapped actions, so the randomly initialized actions are incorrect when the action range is not [-1, 1]. This may affect policy learning and, in particular, model learning in model-based methods.

This PR fixes it by adding an inverse operation before adding random initial actions to the buffer.
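Assuming the usual linear scaling from policy outputs in [-1, 1] to a `Box` action space with bounds `low`/`high`, the inverse operation is the corresponding affine map back. The function names below are illustrative and not necessarily those used in Tianshou.

```python
import numpy as np


def map_action(act: np.ndarray, low: np.ndarray, high: np.ndarray) -> np.ndarray:
    """Policy output in [-1, 1] -> env action in [low, high]."""
    act = np.clip(act, -1.0, 1.0)
    return low + (high - low) * (act + 1.0) / 2.0


def map_action_inverse(act: np.ndarray, low: np.ndarray, high: np.ndarray) -> np.ndarray:
    """Env action in [low, high] -> policy-space action in [-1, 1]."""
    return (act - low) * 2.0 / (high - low) - 1.0


low, high = np.array([0.0, -2.0]), np.array([10.0, 2.0])
# a random initial action is sampled in env space ...
rng = np.random.default_rng(0)
env_act = rng.uniform(low, high)
# ... and mapped back before it is stored, so the buffer holds policy-space actions
stored_act = map_action_inverse(env_act, low, high)
```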

- `collector.collect()` now returns 4 extra keys: rew/rew_std/len/len_std (previously this was done in the logger)
- `save_fn()` is now called at the beginning of the trainer
- Batch: do not raise an error when it finds a list of np.array with different shape[0].
- Venv's obs: add a try...except block for `np.stack(obs_list)`
- remove `venv.__del__` since it is buggy
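A sketch of the `np.stack` fallback for observations whose shapes differ across environments; `stack_obs` is a hypothetical helper. Filling an object array element by element avoids NumPy trying to broadcast the ragged arrays into one another.

```python
from typing import List

import numpy as np


def stack_obs(obs_list: List[np.ndarray]) -> np.ndarray:
    try:
        # common case: same shape in every env -> shape (num_envs, *obs_shape)
        return np.stack(obs_list)
    except ValueError:
        # shapes differ: keep each observation as an element of an object array
        out = np.empty(len(obs_list), dtype=object)
        for i, obs in enumerate(obs_list):
            out[i] = obs
        return out
```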

Change the behavior of `to_numpy` and `to_torch`: from now on, a dict is automatically converted to Batch and a list is automatically converted to np.ndarray (if an error occurs, the exception is raised instead of converting each element in the list).

This PR focuses on refactoring the logging method to fix the bugs with NaN rewards and the log interval. After these two PRs, the fundamental changes to tianshou/data should hopefully be finished, and we can finally concentrate on building benchmarks for Tianshou.

Things changed:

1. trainer now accepts logger (BasicLogger or LazyLogger) instead of writer;

2. remove utils.SummaryWriter;

This PR focuses on changing some trainer definitions to make the trainer friendlier to use and consistent with typical usage in research papers: in particular, `collect-per-step` is changed to `step-per-collect`, `update-per-step` / `episode-per-collect` are added accordingly, and the documentation is updated.
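To make the new definitions concrete, here is a hedged sketch of how `step-per-collect` and `update-per-step` combine, assuming the off-policy trainer runs `round(update_per_step * collected_env_steps)` gradient steps after each collect. `gradient_steps_per_collect` is a hypothetical helper.

```python
def gradient_steps_per_collect(step_per_collect: int, update_per_step: float) -> int:
    # assumed rule: update_per_step gradient steps per collected env step
    return round(step_per_collect * update_per_step)


# e.g. collect 10 env steps, then do 1 gradient step (update_per_step = 0.1)
print(gradient_steps_per_collect(10, 0.1))  # 1
```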

This is the third of the 6 commits mentioned in #274; it features a refactor of Collector to fix #245. You can check #274 for more details.

Things changed in this PR:

1. refactor the collector to be cleaner, and split out AsyncCollector to support async venvs;

2. change the buffer.add API to add(batch, buffer_ids); add several types of buffers (VectorReplayBuffer, PrioritizedVectorReplayBuffer, etc.)

3. add policy.exploration_noise(act, batch) -> act

4. small change in BasePolicy.compute_*_returns

5. move reward_metric from collector to trainer

6. fix np.asanyarray issue (different numpy versions produce different output)

7. flake8 maxlength=88

8. polish docs and fix test
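The `add(batch, buffer_ids)` idea can be pictured with a toy vector buffer: the total size is split into equal, contiguous circular sub-buffers, and `buffer_ids` says which env's sub-buffer each incoming transition goes to. `ToyVectorBuffer` is hypothetical and much simpler than `VectorReplayBuffer` (no batch keys, sizes assumed divisible).

```python
from typing import Any, List, Sequence


class ToyVectorBuffer:
    def __init__(self, total_size: int, buffer_num: int):
        assert total_size % buffer_num == 0, "sketch assumes equal sub-buffer sizes"
        self.size = total_size // buffer_num  # capacity of each sub-buffer
        self.data: List[Any] = [None] * total_size
        self.ptr = [0] * buffer_num  # next write position inside each sub-buffer

    def add(self, items: Sequence[Any], buffer_ids: Sequence[int]) -> List[int]:
        """Write items[i] into sub-buffer buffer_ids[i]; return the global indices."""
        indices = []
        for item, b in zip(items, buffer_ids):
            idx = b * self.size + self.ptr[b]  # offset of sub-buffer b + local pointer
            self.data[idx] = item
            self.ptr[b] = (self.ptr[b] + 1) % self.size  # circular overwrite
            indices.append(idx)
        return indices


buf = ToyVectorBuffer(total_size=6, buffer_num=2)
print(buf.add(["a0", "b0"], buffer_ids=[0, 1]))  # [0, 3]
print(buf.add(["b1"], buffer_ids=[1]))  # [4]
```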

Co-authored-by: n+e <trinkle23897@gmail.com>

Throw a warning in ListReplayBuffer.

This version update is needed because of #289: the previous v0.3.1 does not work well with torch<=1.6.0 in a CUDA environment.

This is the second of the 6 commits mentioned in #274; it features a minor refactor of ReplayBuffer and adds two new ReplayBuffer classes, CachedReplayBuffer and ReplayBufferManager. You can check #274 for more details.

1. Add ReplayBufferManager (handle a list of buffers) and CachedReplayBuffer;

2. Make sure the reserved keys cannot be edited by methods like `buffer.done = xxx`;

3. Add `set_batch` method for manually choosing the batch the ReplayBuffer wants to handle;

4. Add a `sample_index` method, same as `sample` but only returning the index instead of both the index and the batch data;

5. Add `prev` (one-step previous transition index), `next` (one-step next transition index) and `unfinished_index` (the last modified index whose done==False);

6. Separate `alloc_fn` method for allocating new memory for `self._meta` when a new `(key, value)` pair comes in;

7. Move buffer's documentation to `docs/tutorials/concepts.rst`.
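The `prev` / `next` semantics in item 5 can be sketched with NumPy on a full circular buffer: stepping never crosses an episode boundary (a `done` transition) or the seam at the most recently written slot; in those cases the index stays where it is. `prev_index` / `next_index`, and passing `last_index` explicitly, are illustrative choices, not the buffer's exact code.

```python
import numpy as np


def prev_index(index: np.ndarray, done: np.ndarray, last_index: int) -> np.ndarray:
    size = len(done)
    p = (index - 1) % size
    # stay put if the previous slot ended an episode or is the newest slot (the seam)
    stop = done[p] | (p == last_index)
    return np.where(stop, index, p)


def next_index(index: np.ndarray, done: np.ndarray, last_index: int) -> np.ndarray:
    size = len(done)
    # stay put at the end of an episode or at the newest slot
    stop = done[index] | (index == last_index)
    return np.where(stop, index, (index + 1) % size)


done = np.array([False, False, True, False, False])
print(prev_index(np.array([0, 1, 3]), done, last_index=4))  # [0 0 3]
print(next_index(np.array([1, 2, 4]), done, last_index=4))  # [2 2 4]
```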

Co-authored-by: n+e <trinkle23897@gmail.com>

This PR separates the `global_step` into `env_step` and `gradient_step`. In the future, the data from the collecting state will be stored under `env_step`, and the data from the updating state will be stored under `gradient_step`.

Others:

- add `rew_std` and `best_result` into the monitor

- fix network unbounded in `test/continuous/test_sac_with_il.py` and `examples/box2d/bipedal_hardcore_sac.py`

- change the dependency of ray to 1.0.0 since ray-project/ray#10134 has been resolved

Adding a learning indicator (i.e., `self.learning`) makes it convenient to distinguish the state of the policy.

Meanwhile, the state of `self.training` stays unambiguous during the training stage.

Related issue: #211

Others:

- fix a bug in DDQN: target_q could not be sampled from np.random.rand

- fix a bug in DQN atari net: it should add a ReLU before the last layer

- fix a bug in collector timing

Co-authored-by: n+e <463003665@qq.com>