Closes #947

This removes all kwargs from all policy constructors. While doing that, I also improved several names and added a whole lot of TODOs.

## Functional changes:

1. Added possibility to pass None as `critic2` and `critic2_optim`. In fact, the default behavior then should cover the absolute majority of cases
2. Added a function called `clone_optimizer` as a temporary measure to support passing `critic2_optim=None`

## Breaking changes:

1. `action_space` is no longer optional. In fact, it already was non-optional, as there was a ValueError in BasePolicy.init. So now several examples were fixed to reflect that
2. `reward_normalization` removed from DDPG and children. It was never allowed to pass it as `True` there, an error would have been raised in `compute_n_step_reward`. Now I removed it from the interface
3. renamed `critic1` and similar to `critic`, in order to have uniform interfaces. Note that the `critic` in DDPG was optional for the sole reason that child classes used `critic1`. I removed this optionality (DDPG can't do anything with `critic=None`)
4. Several renamings of fields (mostly private to public, so backwards compatible)

## Additional changes:

1. Removed type and default declaration from docstring. This kind of duplication is really not necessary
2. Policy constructors are now only called using named arguments, not a fragile mixture of positional and named as before
3. Minor beautifications in typing and code
4. Generally shortened docstrings and made them uniform across all policies (hopefully)

## Comment:

With these changes, several problems in tianshou's inheritance hierarchy become more apparent. I tried highlighting them for future work.

---------

Co-authored-by: Dominik Jain <d.jain@appliedai.de>

Atari Environment

EnvPool

We highly recommend using envpool to run the following experiments. To install it on a Linux machine, run:

pip install envpool

After that, atari_wrapper will automatically switch to EnvPool's Atari env. EnvPool's implementation is much faster than the Python vectorized env implementation (about 2~3x faster in pure execution speed and about 1.5x faster for the overall RL training pipeline), and its behavior is consistent with that approach (the OpenAI wrapper), which is described below.

For more information, please refer to EnvPool's GitHub, Docs, and 3rd-party report.

ALE-py

The sample speed is ~3000 env steps per second (in fact ~12000 Atari frames per second, since we use frame_stack=4) in normal mode (using a CNN policy and a collector, and storing data in the buffer).

The env wrapper is crucial: without wrappers, the agent cannot perform well on Atari games. Many existing RL codebases use the OpenAI wrapper, but it is not the original DeepMind version (related issue). Dopamine has a different wrapper, but unfortunately it does not work very well in our codebase.
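As one illustration of what such a wrapper does, the frame stacking mentioned above (frame_stack=4) can be sketched roughly as follows. This is a minimal, library-free sketch: the `FrameStack` class and its `reset`/`step` methods are hypothetical names chosen for illustration and are not the actual atari_wrapper implementation.

```python
from collections import deque


class FrameStack:
    """Hypothetical helper: keeps the last `k` frames as one observation."""

    def __init__(self, k=4):
        self.k = k
        self.frames = deque(maxlen=k)

    def reset(self, first_frame):
        # At episode start, fill the stack with copies of the first frame.
        self.frames.clear()
        for _ in range(self.k):
            self.frames.append(first_frame)
        return list(self.frames)

    def step(self, new_frame):
        # Appending to a full deque drops the oldest frame automatically.
        self.frames.append(new_frame)
        return list(self.frames)


stack = FrameStack(k=4)
obs = stack.reset("f0")  # frames are plain strings here purely for illustration
obs = stack.step("f1")   # the newest frame is last, the oldest was dropped
```

Stacking gives the policy a short history of frames, from which it can infer motion that a single frame cannot convey.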

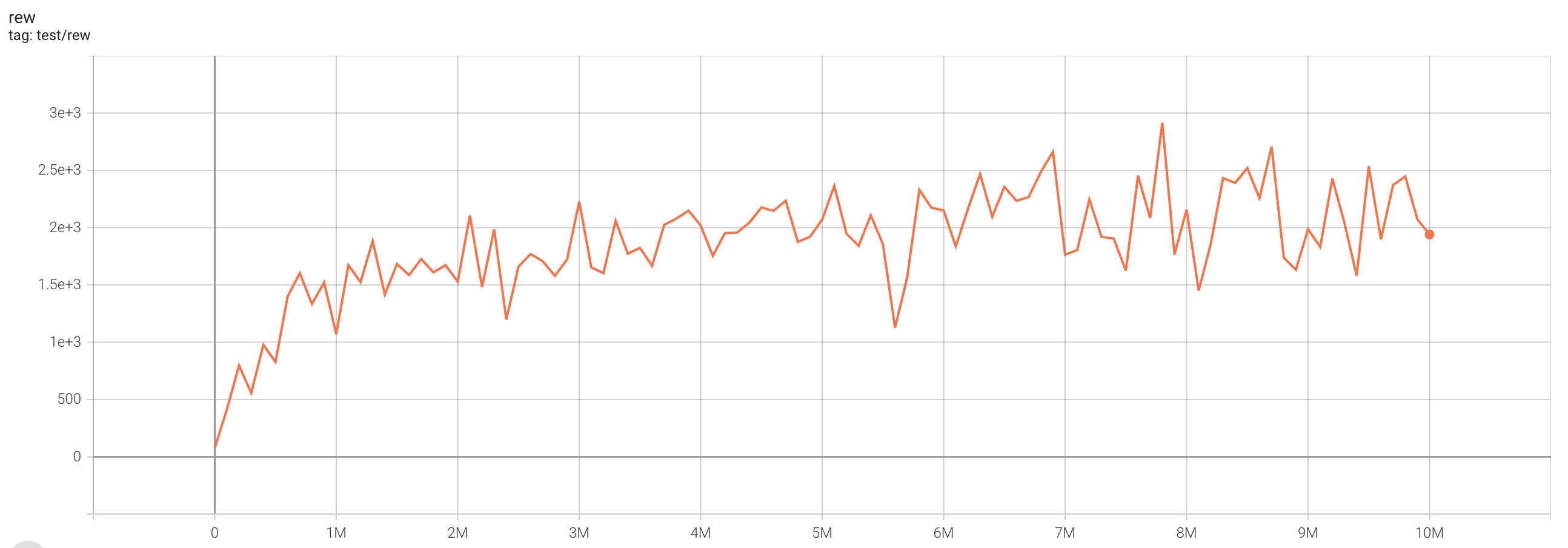

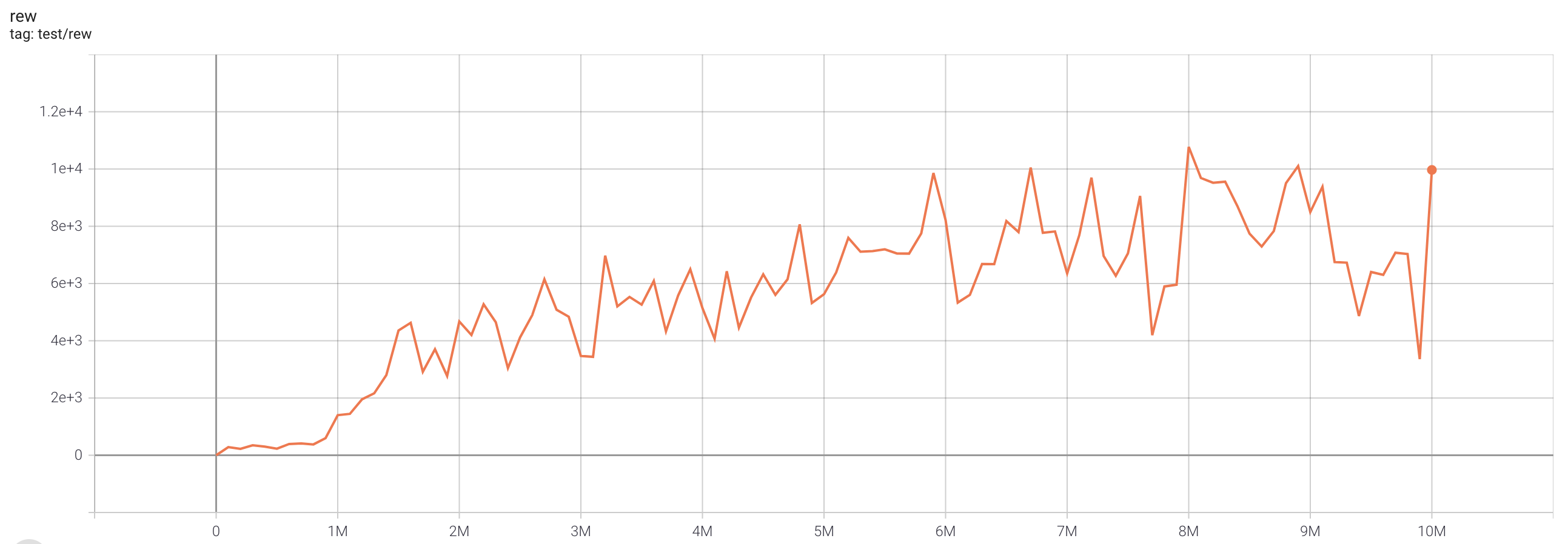

DQN (single run)

One epoch here equals 100,000 env steps, so 100 epochs correspond to 10M steps.
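The epoch-to-step conversion can be checked with a few lines of arithmetic. The sketch below assumes that the same 4x step-to-frame ratio quoted in the ALE-py section applies; the constant and function names are made up for illustration.

```python
STEPS_PER_EPOCH = 100_000  # from the text: one epoch = 100,000 env steps
FRAMES_PER_STEP = 4        # assumption: the 4x ratio quoted in the ALE-py section


def total_env_steps(epochs):
    # Total env steps collected after the given number of epochs.
    return epochs * STEPS_PER_EPOCH


def total_frames(epochs):
    # Total Atari frames, under the assumed 4 frames per env step.
    return total_env_steps(epochs) * FRAMES_PER_STEP


steps = total_env_steps(100)  # 10,000,000 env steps
frames = total_frames(100)    # 40,000,000 frames under the assumption above
```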

Note: The eps_train_final and eps_test values in the original DQN paper are 0.1 and 0.01, but some works have found that a smaller eps improves performance. Also, a larger batch size (say 64 instead of 32) speeds up convergence but slows down training.
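To make the role of eps_train_final concrete, here is a minimal sketch of a linear epsilon schedule. The starting value of 1.0 and the 1M-step decay horizon are assumptions for illustration, and `linear_eps` is a hypothetical helper rather than a function from this codebase.

```python
def linear_eps(step, eps_start=1.0, eps_final=0.1, decay_steps=1_000_000):
    """Linearly anneal epsilon from eps_start to eps_final, then hold it.

    eps_start and decay_steps are assumed values; eps_final plays the
    role of eps_train_final from the note above.
    """
    if step >= decay_steps:
        return eps_final
    frac = step / decay_steps
    return eps_start + frac * (eps_final - eps_start)


eps_at_start = linear_eps(0)           # starts at eps_start
eps_halfway = linear_eps(500_000)      # halfway between start and final
eps_after = linear_eps(2_000_000)      # held at eps_final after the decay
```

At test time a fixed, smaller value (eps_test) would be used instead of the schedule.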

We haven't tuned these results, so have fun playing with the hyperparameters!

C51 (single run)

One epoch here equals 100,000 env steps, so 100 epochs correspond to 10M steps.

Note: The selection of n_step is based on Figure 6 in the Rainbow paper.
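For readers unfamiliar with what n_step controls, the sketch below computes an n-step return: the discounted sum of the next n rewards plus a discounted bootstrap value. It is a simplified illustration that ignores episode termination, and `n_step_return` is a hypothetical helper, not the library's implementation.

```python
def n_step_return(rewards, bootstrap_value, gamma=0.99):
    """Discounted sum of n rewards plus the discounted bootstrap value.

    `rewards` holds the n rewards following the current step;
    `bootstrap_value` is the value estimate at the state reached after n steps.
    """
    n = len(rewards)
    ret = sum((gamma ** k) * r for k, r in enumerate(rewards))
    return ret + (gamma ** n) * bootstrap_value


# n_step = 3 with gamma = 0.5: 1 + 0.5*0 + 0.25*1 + 0.125*2
g = n_step_return([1.0, 0.0, 1.0], bootstrap_value=2.0, gamma=0.5)
```

A larger n_step propagates reward information faster but relies more on the sampled trajectory, which is the trade-off behind choosing it.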

QRDQN (single run)

One epoch here equals 100,000 env steps, so 100 epochs correspond to 10M steps.

IQN (single run)

One epoch here equals 100,000 env steps, so 100 epochs correspond to 10M steps.

FQF (single run)

One epoch here equals 100,000 env steps, so 100 epochs correspond to 10M steps.

Rainbow (single run)

One epoch here equals 100,000 env steps, so 100 epochs correspond to 10M steps.

PPO (single run)

One epoch here equals 100,000 env steps, so 100 epochs correspond to 10M steps.

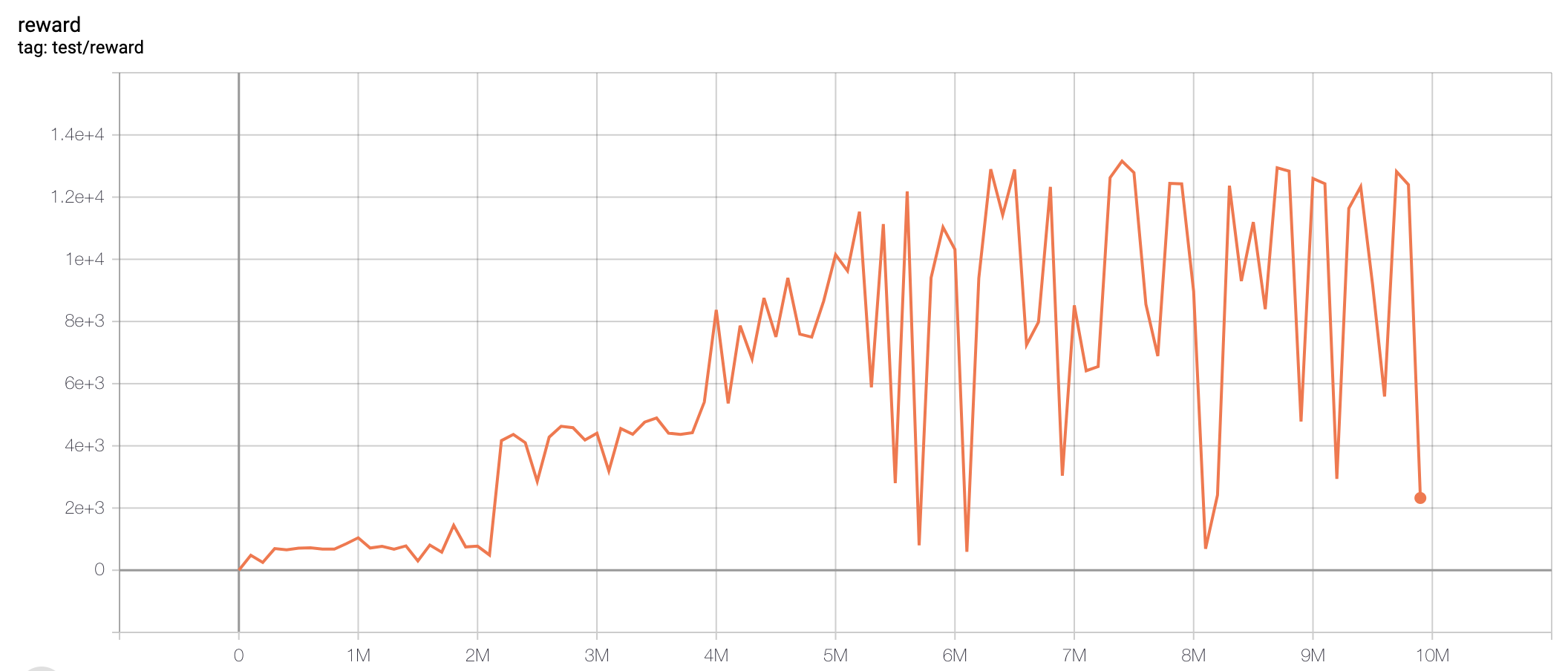

SAC (single run)

One epoch here equals 100,000 env steps, so 100 epochs correspond to 10M steps.