## implementation

I implemented HER solely as a replay buffer. It works by temporarily
re-writing the transition storage (`self._meta`) in place during the
`sample_indices()` call. The original transitions are cached and
restored at the beginning of the next sampling call or when any other
method is called. This ensures that, for example, the n-step return
calculation can be done without altering the policy.
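
As a rough illustration of the cache-and-restore idea described above, here is a minimal sketch. It is not the code in this PR: `RewritableStore`, `rewrite()` and `restore()` are hypothetical names, and a flat reward array stands in for the real transition storage.

```python
import numpy as np


class RewritableStore:
    """Hypothetical stand-in for a transition store (not the real buffer API)."""

    def __init__(self, rewards: np.ndarray) -> None:
        self.rewards = rewards.copy()
        # Indices and original values overwritten by the last rewrite.
        self._cached_idx = np.array([], dtype=int)
        self._cached_rew = np.array([], dtype=self.rewards.dtype)

    def restore(self) -> None:
        """Put the original values back before anything else reads the store."""
        if len(self._cached_idx) > 0:
            self.rewards[self._cached_idx] = self._cached_rew
        self._cached_idx = np.array([], dtype=int)
        self._cached_rew = np.array([], dtype=self.rewards.dtype)

    def rewrite(self, idx: np.ndarray, new_rewards: np.ndarray) -> None:
        """Temporarily overwrite the entries at idx, caching the originals."""
        self.restore()  # undo any previous rewrite first
        self._cached_idx = idx.copy()
        self._cached_rew = self.rewards[idx].copy()
        self.rewards[idx] = new_rewards


store = RewritableStore(np.array([0.0, -1.0, -1.0, 0.0]))
store.rewrite(np.array([1, 2]), np.array([0.0, 0.0]))
rewritten = store.rewards.tolist()
store.restore()
restored = store.rewards.tolist()
```

In this sketch every method that reads the store would call `restore()` first, which is the role the cache plays in the description above.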

There is also a problem with the original index sampling: the sampled
indices are not guaranteed to come from different episodes. So I decided
to perform the re-writing per episode. This guarantees that sampled
transitions from the same episode have the same re-written goal. It also
makes the re-writing ratio differ slightly from the paper, but the
difference should be small if there are many episodes in the buffer.
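
To make the per-episode re-writing more concrete, here is a small sketch under stated assumptions; it is not the implementation in this PR. The function name `relabel_by_episode`, the flat `episode_id` array, the distance threshold `eps`, and the sparse 0 / -1 reward are all assumptions for illustration. It also assumes the shared goal is drawn at or after the latest sampled step of each episode, so that it lies in the future for every sampled transition of that episode.

```python
import numpy as np


def relabel_by_episode(achieved, desired, episode_id, sampled_idx, rng, eps=0.05):
    """Return relabeled (desired_goal, reward) copies for the sampled indices.

    achieved[t] is the goal reached after transition t (assumption).
    All sampled transitions of one episode receive the same new goal.
    """
    new_desired = desired[sampled_idx].copy()
    sampled_eps = episode_id[sampled_idx]
    for ep in np.unique(sampled_eps):
        in_ep = sampled_eps == ep                  # mask over sampled positions
        ep_steps = np.flatnonzero(episode_id == ep)
        latest = sampled_idx[in_ep].max()
        # 'future' strategy: pick an achieved goal from a later step of the episode.
        future = rng.choice(ep_steps[ep_steps >= latest])
        new_desired[in_ep] = achieved[future]
    # Sparse reward recomputed against the new goal: 0 if reached, else -1.
    dist = np.linalg.norm(achieved[sampled_idx] - new_desired, axis=-1)
    reward = np.where(dist > eps, -1.0, 0.0)
    return new_desired, reward


achieved = np.array([[0.0], [1.0], [2.0], [10.0], [11.0], [12.0]])
desired = np.full((6, 1), 99.0)
episode_id = np.array([0, 0, 0, 1, 1, 1])
sampled_idx = np.array([0, 2, 4])
new_desired, reward = relabel_by_episode(
    achieved, desired, episode_id, sampled_idx, np.random.default_rng(0)
)
```

Here indices 0 and 2 belong to the same episode, so they end up with the same re-written goal, while index 4 gets a goal from its own episode.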

In the current commit, the HER replay buffer only supports the 'future'
strategy and online sampling. This combination is the best of HER in
terms of performance and memory efficiency.

I also added a few convenience replay buffers
(`HERVectorReplayBuffer`, `HERReplayBufferManager`), a test env
(`MyGoalEnv`), a gym wrapper (`TruncatedAsTerminated`), unit tests, and a
simple example (examples/offline/fetch_her_ddpg.py).

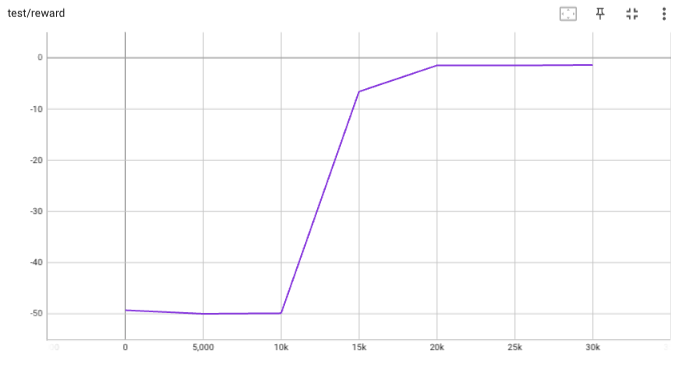

## verification

I have added unit tests for almost everything I have implemented.
The HER replay buffer was also tested using DDPG on the
[`FetchReach-v3` env](https://github.com/Farama-Foundation/Gymnasium-Robotics).
I used the default DDPG parameters from the mujoco example and did not
tune anything further to get this good result (train script:
examples/offline/fetch_her_ddpg.py).

Cherry-pick from #200

- update the function signature

- format code-style

- move _compile into separate functions

- fix a bug in to_torch and to_numpy (Batch)

- remove None in action_range

In short, the code-format change only covers function-signature style and `'` -> `"` (picked up from [black](https://github.com/psf/black)).

- Refactor code to remove duplicate code

- Enable async simulation for all vector envs

- Remove `collector.close` and rename `VectorEnv` to `DummyVectorEnv`

The abstraction of vector envs changed.

Prior to this PR, each vector env was almost independent.

After this PR, each env is wrapped in a worker, and vector envs differ by their worker type. In fact, users can just use `BaseVectorEnv` with different workers; I keep `SubprocVectorEnv` and `ShmemVectorEnv` for backward compatibility.
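
The worker idea can be sketched roughly as follows. This is a simplified illustration, not the classes from this PR: `Worker`, `InProcessWorker`, `SketchVectorEnv` and `CounterEnv` are hypothetical names, and the toy env returns a single number instead of a full step tuple.

```python
from abc import ABC, abstractmethod
from typing import Any, Callable, List


class Worker(ABC):
    """Hypothetical worker interface: owns one env and decides how to run it."""

    def __init__(self, env_fn: Callable[[], Any]) -> None:
        self.env_fn = env_fn

    @abstractmethod
    def step(self, action: Any) -> Any:
        ...


class InProcessWorker(Worker):
    """Runs the env in the current process; other workers could use subprocesses."""

    def __init__(self, env_fn: Callable[[], Any]) -> None:
        super().__init__(env_fn)
        self.env = env_fn()

    def step(self, action: Any) -> Any:
        return self.env.step(action)


class SketchVectorEnv:
    """The vector env only knows about workers, not how each env is executed."""

    def __init__(
        self,
        env_fns: List[Callable[[], Any]],
        worker_fn: Callable[[Callable[[], Any]], Worker],
    ) -> None:
        self.workers = [worker_fn(fn) for fn in env_fns]

    def step(self, actions: List[Any]) -> List[Any]:
        return [w.step(a) for w, a in zip(self.workers, actions)]


class CounterEnv:
    """Toy env for the sketch: step() returns the running sum of actions."""

    def __init__(self) -> None:
        self.total = 0

    def step(self, action: int) -> int:
        self.total += action
        return self.total


venv = SketchVectorEnv([CounterEnv, CounterEnv], InProcessWorker)
first = venv.step([1, 2])
second = venv.step([3, 4])
```

Swapping `InProcessWorker` for a different worker class would change how envs are executed without touching the vector env itself, which is the design point described above.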

Co-authored-by: n+e <463003665@qq.com>

Co-authored-by: magicly <magicly007@gmail.com>

* make fields with empty Batch rather than None after reset

* dummy code

* remove dummy

* add reward_length argument for collector

* Improve Batch (#126)

* make sure the key type of Batch is string, and add unit tests

* add is_empty() function and unit tests

* enable cat of mixing dict and Batch, just like stack

* bugfix for reward_length

* add get_final_reward_fn argument to collector to deal with marl

* minor polish

* remove multibuf

* minor polish

* improve and implement Batch.cat_

* bugfix for buffer.sample with field impt_weight

* restore the usage of a.cat_(b)

* fix 2 bugs in batch and add corresponding unittest

* code fix for update

* update is_empty to recognize empty over empty; bugfix for len

* bugfix for update and add testcase

* add testcase of update

* make fields with empty Batch rather than None after reset

* dummy code

* remove dummy

* add reward_length argument for collector

* bugfix for reward_length

* add get_final_reward_fn argument to collector to deal with marl

* make sure the key type of Batch is string, and add unit tests

* add is_empty() function and unit tests

* enable cat of mixing dict and Batch, just like stack

* dummy code

* remove dummy

* add multi-agent example: tic-tac-toe

* move TicTacToeEnv to a separate file

* remove dummy MANet

* code refactor

* move tic-tac-toe example to test

* update doc with marl-example

* fix docs

* reduce the threshold

* revert

* update player id to start from 1 and change player to agent; keep coding

* add reward_length argument for collector

* Improve Batch (#128)

* minor polish

* improve and implement Batch.cat_

* bugfix for buffer.sample with field impt_weight

* restore the usage of a.cat_(b)

* fix 2 bugs in batch and add corresponding unittest

* code fix for update

* update is_empty to recognize empty over empty; bugfix for len

* bugfix for update and add testcase

* add testcase of update

* fix docs

* fix docs

* fix docs [ci skip]

* fix docs [ci skip]

Co-authored-by: Trinkle23897 <463003665@qq.com>

* refact

* re-implement Batch.stack and add testcases

* add doc for Batch.stack

* reward_metric

* modify flag

* minor fix

* reuse _create_values and refactor stack_ & cat_

* fix pep8

* fix reward stat in collector

* fix stat of collector, simplify test/base/env.py

* fix docs

* minor fix

* raise exception for stacking with partial keys and axis!=0

* minor fix

* minor fix

* minor fix

* marl-examples

* add condense; bugfix for torch.Tensor; code refactor

* marl example can run now

* enable tic tac toe with larger board size and win-size

* add test dependency

* Fix padding of inconsistent keys with Batch.stack and Batch.cat (#130)

* re-implement Batch.stack and add testcases

* add doc for Batch.stack

* reuse _create_values and refactor stack_ & cat_

* fix pep8

* fix docs

* raise exception for stacking with partial keys and axis!=0

* minor fix

* minor fix

Co-authored-by: Trinkle23897 <463003665@qq.com>

* stash

* let agent learn to play as agent 2 which is harder

* code refactor

* Improve collector (#125)

* remove multibuf

* reward_metric

* make fields with empty Batch rather than None after reset

* many fixes and refactor

Co-authored-by: Trinkle23897 <463003665@qq.com>

* marl for tic-tac-toe and general gomoku

* update default gamma to 0.1 for tic tac toe to win earlier

* fix name typo; change default game config; add rew_norm option

* fix pep8

* test commit

* mv test dir name

* add rew flag

* fix torch.optim import error and madqn rew_norm

* remove useless kwargs

* Vector env enable select worker (#132)

* Enable selecting worker for vector env step method.

* Update collector to match new vecenv selective worker behavior.

* Bug fix.

* Fix rebase

Co-authored-by: Alexis Duburcq <alexis.duburcq@wandercraft.eu>

* show the last move of tictactoe by capital letters

* add multi-agent tutorial

* fix link

* Standardized behavior of Batch.cat and misc code refactor (#137)

* code refactor; remove unused kwargs; add reward_normalization for dqn

* bugfix for __setitem__ with torch.Tensor; add Batch.condense

* minor fix

* support cat with empty Batch

* remove the dependency of is_empty on len; specify the semantic of empty Batch by test cases

* support stack with empty Batch

* remove condense

* refactor code to reflect the shared / partial / reserved categories of keys

* add is_empty(recursive=False)

* doc fix

* docfix and bugfix for _is_batch_set

* add doc for key reservation

* bugfix for algebra operators

* fix cat with lens hint

* code refactor

* bugfix for storing None

* use ValueError instead of exception

* hide lens away from users

* add comment for __cat

* move the computation of the initial value of lens in cat_ itself.

* change the place of doc string

* doc fix for Batch doc string

* change recursive to recurse

* doc string fix

* minor fix for batch doc

* write tutorials to specify the standard of Batch (#142)

* add doc for len exceptions

* doc move; unify is_scalar_value function

* remove some issubclass check

* bugfix for shape of Batch(a=1)

* keep moving doc

* keep writing batch tutorial

* draft version of Batch tutorial done

* improving doc

* keep improving doc

* batch tutorial done

* rename _is_number

* rename _is_scalar

* shape property do not raise exception

* restore some doc string

* grammarly [ci skip]

* grammarly + fix warning of building docs

* polish docs

* trim and re-arrange batch tutorial

* go straight to the point

* minor fix for batch doc

* add shape / len in basic usage

* keep improving tutorial

* unify _to_array_with_correct_type to remove duplicate code

* delegate type conversion to Batch.__init__

* further delegate type conversion to Batch.__init__

* bugfix for setattr

* add a _parse_value function

* remove dummy function call

* polish docs

Co-authored-by: Trinkle23897 <463003665@qq.com>

* bugfix for mapolicy

* pretty code

* remove debug code; remove condense

* doc fix

* check before get_agents in tutorials/tictactoe

* tutorial

* fix

* minor fix for batch doc

* minor polish

* faster test_ttt

* improve tic-tac-toe environment

* change default epoch and step-per-epoch for tic-tac-toe

* fix mapolicy

* minor polish for mapolicy

* 90% to 80% (need to change the tutorial)

* win rate

* show step number at board

* simplify mapolicy

* minor polish for mapolicy

* remove MADQN

* fix pep8

* change legal_actions to mask (need to update docs)

* simplify maenv

* fix typo

* move basevecenv to single file

* separate RandomAgent

* update docs

* grammarly

* fix pep8

* win rate typo

* format in cheatsheet

* use bool mask directly

* update doc for boolean mask

Co-authored-by: Trinkle23897 <463003665@qq.com>

Co-authored-by: Alexis DUBURCQ <alexis.duburcq@gmail.com>

Co-authored-by: Alexis Duburcq <alexis.duburcq@wandercraft.eu>

* update atari.py

* fix setup.py

pass the pytest

* fix setup.py

pass the pytest

* add args "render"

* change the tensorboard writer

* change the tensorboard writer

* change device, render, tensorboard log location

* change device, render, tensorboard log location

* remove some wrong local files

* fix some tab mistakes and the envs name in continuous/test_xx.py

* add examples and point robot maze environment

* fix some bugs during testing examples

* add dqn network and fix some args

* change back the tensorboard writer's frequency to ensure ppo and a2c can write things normally

* add a warning to collector

* rm some unrelated files

* reformat

* fix a bug in test_dqn caused by selecting the wrong model

* change atari frame skip and observation to improve performance

* readd some files

* change import

* modified readme

* rm tensorboard log

* update atari and mujoco which are ignored

* rm the wrong lines

* readd the import of PointMaze

* fix a typo in test/discrete/net.py

* add a class declaration to pass the flake8

* fix flake8 errors