Changes:

- Disclaimer in README

- Replaced all occurrences of Gym with Gymnasium

- Removed code that is now dead since we no longer need to support the old step API

- Updated type hints to only allow new step API

- Increased required version of envpool to support Gymnasium

- Increased required version of PettingZoo to support Gymnasium

- Updated `PettingZooEnv` to only use the new step API and removed the hack that also supported the old API

- I had to add some `# type: ignore` comments due to new type hinting in Gymnasium. I'm not that familiar with type hinting, but I believe the issue is on the Gymnasium side, and we are looking into it.

- Had to update `MyTestEnv` to support the `options` kwarg

- Skip NNI tests because they still use OpenAI Gym

- Also allow `PettingZooEnv` in vector environments

- Updated the ReplayBuffer doc page to also cover the `terminated` and `truncated` flags.
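Under the Gymnasium API, `reset(seed=None, options=None)` returns `(obs, info)`. `step(action)` returns `(obs, reward, terminated, truncated, info)`. The sketch below shows that shape on a hypothetical toy environment and does not import Gymnasium, so it stays self-contained. It also shows how a single episode-boundary flag can be derived from the two new flags. `ToyCountEnv`, `goal`, `max_steps` and the `"start"` option are illustrative only and are not part of Tianshou or Gymnasium.

```python
import random
from typing import Any, Dict, Optional, Tuple


class ToyCountEnv:
    """Hypothetical env following the Gymnasium reset/step signatures."""

    def __init__(self, goal: int = 3, max_steps: int = 5) -> None:
        self.goal = goal
        self.max_steps = max_steps
        self.rng = random.Random()
        self.state = 0
        self.t = 0

    def reset(
        self, seed: Optional[int] = None, options: Optional[Dict[str, Any]] = None
    ) -> Tuple[int, Dict[str, Any]]:
        if seed is not None:
            self.rng.seed(seed)
        # `options` customises the reset; here it may fix the start state
        self.state = (options or {}).get("start", self.rng.randint(0, 2))
        self.t = 0
        return self.state, {}

    def step(self, action: int) -> Tuple[int, float, bool, bool, Dict[str, Any]]:
        self.state += action
        self.t += 1
        terminated = self.state >= self.goal  # the task itself ended the episode
        truncated = not terminated and self.t >= self.max_steps  # time limit hit
        reward = 1.0 if terminated else 0.0
        return self.state, reward, terminated, truncated, {}


env = ToyCountEnv()
obs, info = env.reset(seed=0, options={"start": 1})
obs, rew, terminated, truncated, info = env.step(2)
# a buffer that still needs a single episode-boundary flag can derive it
done = terminated or truncated
```

Keeping `terminated` and `truncated` separate matters for bootstrapping. A truncated episode still has a meaningful next-state value, while a terminated one does not.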

Still need to do:

- Update the Jupyter notebooks in docs

- Check the entire code base for more dead code (from compatibility stuff)

- Check the reset functions of all environments/wrappers in the code base to make sure they use the `options` kwarg

- Someone might want to check test_env_finite.py

- Is it okay to allow `PettingZooEnv` in vector environments? We might need to update the docs.

## implementation

I implemented HER solely as a replay buffer. It works by temporarily rewriting the transition storage (`self._meta`) directly during the `sample_indices()` call. The original transitions are cached and restored at the beginning of the next sampling call or when any other method is called. This ensures that, for example, n-step return calculation can be done without altering the policy.
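The cache-and-restore idea can be shown in isolation. This is a minimal sketch that assumes transitions live in a dict of NumPy arrays standing in for `self._meta`. `CachingBuffer`, `_restore_cache`, `save_checkpoint` and the relabel rule (use the goal achieved at that step) are all hypothetical. This is not Tianshou's actual HER code.

```python
import numpy as np


class CachingBuffer:
    """Toy buffer that rewrites goals in place while sampling and undoes it later."""

    def __init__(self, goal: np.ndarray, achieved_goal: np.ndarray) -> None:
        # stand-in for self._meta: one array per field
        self._meta = {"goal": goal.copy(), "achieved_goal": achieved_goal.copy()}
        self._cache = None  # (indices, original goals) saved by the last sample

    def _restore_cache(self) -> None:
        """Put the original goals back if a previous sample rewrote any."""
        if self._cache is not None:
            idx, original = self._cache
            self._meta["goal"][idx] = original
            self._cache = None

    def sample_indices(self, idx: np.ndarray) -> np.ndarray:
        """'Sample' the given indices and temporarily relabel their goals."""
        self._restore_cache()
        self._cache = (idx, self._meta["goal"][idx].copy())
        # hypothetical relabel rule: use the goal achieved at that step
        self._meta["goal"][idx] = self._meta["achieved_goal"][idx]
        return idx

    def __getitem__(self, key: str) -> np.ndarray:
        # plain reads (e.g. by an n-step return helper) see the rewritten goals
        return self._meta[key]

    def save_checkpoint(self) -> dict:
        # any other public method restores first, so originals are what persist
        self._restore_cache()
        return {k: v.copy() for k, v in self._meta.items()}
```

Code that reads the buffer between sampling and learning sees the relabeled goals. Anything that persists or mutates the buffer sees the originals.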

There is also a problem with the original index sampling: the sampled indices are not guaranteed to come from different episodes. So I decided to perform the rewriting per episode. This guarantees that sampled transitions from the same episode will have the same rewritten goal. This also makes the rewriting ratio calculation differ slightly from the paper, but the difference should be small if there are many episodes in the buffer.
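Per-episode 'future' rewriting might look roughly like the sketch below. It assumes a flat, non-wrapping buffer with a per-transition `episode_id` and the last index of each episode in `episode_end`. `her_ratio` is a hypothetical per-episode relabel probability, and the function name is illustrative. For each episode in the sample, it draws one future step no earlier than the latest sampled step of that episode. It then reuses that step's achieved goal for every sampled transition of the episode.

```python
import numpy as np


def relabel_future_per_episode(
    sampled: np.ndarray,
    episode_id: np.ndarray,
    episode_end: np.ndarray,
    achieved_goal: np.ndarray,
    goal: np.ndarray,
    her_ratio: float,
    rng: np.random.Generator,
) -> np.ndarray:
    """Return new goals for `sampled` ('future' strategy, one goal per episode)."""
    new_goal = goal[sampled].copy()
    for ep in np.unique(episode_id[sampled]):
        if rng.random() >= her_ratio:
            continue  # keep the original goal for this whole episode
        mask = episode_id[sampled] == ep
        # the future step must not precede any sampled step of this episode
        first_future = sampled[mask].max()
        future = rng.integers(first_future, episode_end[ep] + 1)
        new_goal[mask] = achieved_goal[future]
    return new_goal
```

Because the coin is flipped per episode rather than per transition, the fraction of relabeled transitions only approximates `her_ratio`. The approximation improves as more distinct episodes appear in each sample.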

In the current commit, the HER replay buffer only supports the 'future' strategy and online sampling. This is the best variant of HER in terms of performance and memory efficiency.

I also added a few more convenience replay buffers (`HERVectorReplayBuffer`, `HERReplayBufferManager`), a test env (`MyGoalEnv`), a gym wrapper (`TruncatedAsTerminated`), unit tests, and a simple example (examples/offline/fetch_her_ddpg.py).

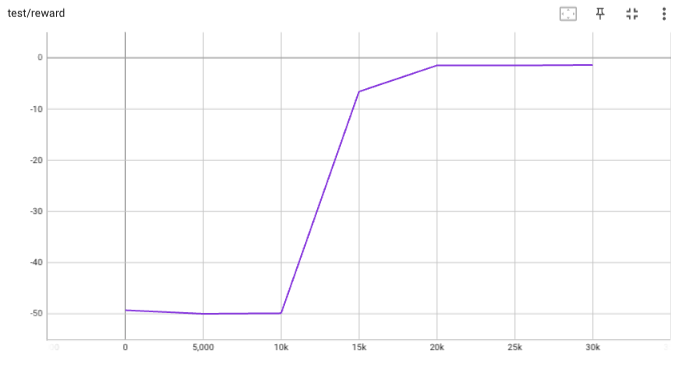

## verification

I have added unit tests for almost everything I have implemented.

The HER replay buffer was also tested using DDPG on the [`FetchReach-v3` env](https://github.com/Farama-Foundation/Gymnasium-Robotics). I used the default DDPG parameters from the MuJoCo example and didn't tune anything further to get this good result! (Train script: examples/offline/fetch_her_ddpg.py.)

* Changes to support Gym 0.26.0

* Replace map by simpler list comprehension

* Use syntax that is compatible with python 3.7

* Format code

* Fix environment seeding in test environment, fix buffer_profile test

* Remove self.seed() from __init__

* Fix random number generation

* Fix throughput tests

* Fix tests

* Removed done field from Buffer, fixed throughput test, turned off wandb, fixed formatting, fixed type hints, allow preprocessing_fn with truncated and terminated arguments, updated docstrings

* fix lint

* fix

* fix import

* fix

* fix mypy

* pytest --ignore='test/3rd_party'

* Use correct step API in _SetAttrWrapper

* Format

* Fix mypy

* Format

* Fix pydocstyle.

Fixes some deprecation warnings due to new changes in gym version 0.23:

- use `env.np_random.integers` instead of `env.np_random.randint`

- support `seed` and `return_info` arguments for reset (addresses https://github.com/thu-ml/tianshou/issues/605)

- change the internal API name of worker: send_action -> send, get_result -> recv (align with envpool)

- add a timing test for venvs.reset() to make sure the resets are executed concurrently

- change venvs.reset() logic
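The `integers` change reflects `env.np_random` behaving like a NumPy `Generator`, which exposes `integers` instead of the legacy `RandomState.randint`. At the same time, `reset` gained a `seed` argument. The sketch below shows that pattern on a hypothetical `SeededEnv`. Only the NumPy calls are real API; the class and its state range are illustrative.

```python
from typing import Optional

import numpy as np


class SeededEnv:
    """Hypothetical env that seeds its generator through reset()."""

    def __init__(self) -> None:
        self.np_random = np.random.default_rng()
        self.state = 0

    def reset(self, seed: Optional[int] = None) -> int:
        if seed is not None:
            # re-create the generator so the same seed replays the same episode
            self.np_random = np.random.default_rng(seed)
        # Generator.integers replaces the legacy RandomState.randint (high is exclusive)
        self.state = int(self.np_random.integers(0, 10))
        return self.state
```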

Co-authored-by: Jiayi Weng <trinkle23897@gmail.com>

This PR implements BCQPolicy, which can be used to train an offline agent in environments with a continuous action space. An experimental result on 'halfcheetah-expert-v1', a D4RL environment for offline reinforcement learning, is provided.

Example usage is in examples/offline/offline_bcq.py.

- Batch: do not raise an error when it finds a list of np.array with different shape[0].

- Venv's obs: add a try...except block around np.stack(obs_list)

- remove venv.__del__ since it is buggy
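The `try...except` around `np.stack(obs_list)` could work like this. Stack when all observations share a shape, otherwise fall back to a 1-D object array. `stack_obs` is a hypothetical helper. The object-array fallback is an assumption about what the venv does on failure, not a description of Tianshou's code.

```python
from typing import List

import numpy as np


def stack_obs(obs_list: List[np.ndarray]) -> np.ndarray:
    try:
        # fast path: all observations have the same shape
        return np.stack(obs_list)
    except ValueError:
        # shapes differ: keep each observation as a separate Python object
        out = np.empty(len(obs_list), dtype=object)
        for i, obs in enumerate(obs_list):
            out[i] = obs
        return out
```

Filling the object array element by element avoids NumPy trying to broadcast arrays of different shapes into each other.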

Change the behavior of to_numpy and to_torch: from now on, a dict is automatically converted to a Batch and a list is automatically converted to an np.ndarray (if an error occurs, the exception is raised instead of converting each element of the list).

This PR focuses on refactoring the logging method to fix the NaN reward bug and the log interval bug. After these two PRs, the fundamental changes to tianshou/data should hopefully be finished, and we can finally concentrate on building benchmarks for Tianshou.

Things changed:

1. trainer now accepts logger (BasicLogger or LazyLogger) instead of writer;

2. remove utils.SummaryWriter;

This is the third PR of 6 commits mentioned in #274, which features a refactor of Collector to fix #245. See #274 for more details.

Things changed in this PR:

1. refactor Collector to be cleaner; split off AsyncCollector to support async venvs;

2. change the buffer.add API to add(batch, buffer_ids); add several types of buffers (VectorReplayBuffer, PrioritizedVectorReplayBuffer, etc.)

3. add policy.exploration_noise(act, batch) -> act

4. small change in BasePolicy.compute_*_returns

5. move reward_metric from collector to trainer

6. fix np.asanyarray issue (different numpy versions produce different output)

7. flake8 maxlength=88

8. polish docs and fix test
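With `add(batch, buffer_ids)`, each environment writes into its own slice of one shared storage. The sketch below shows that layout with equal-sized circular sub-buffers and a single `rew` field. `VectorBufferSketch` is hypothetical and far simpler than Tianshou's `VectorReplayBuffer`. It assumes each buffer id appears at most once per `add` call, which holds when every env contributes one transition per step.

```python
import numpy as np


class VectorBufferSketch:
    def __init__(self, total_size: int, buffer_num: int) -> None:
        assert total_size % buffer_num == 0
        self.sub_size = total_size // buffer_num
        self.rew = np.zeros(total_size)
        self.offset = np.arange(buffer_num) * self.sub_size  # start of each slice
        self.ptr = np.zeros(buffer_num, dtype=int)  # next write position per slice
        self.length = np.zeros(buffer_num, dtype=int)  # filled entries per slice

    def add(self, rew: np.ndarray, buffer_ids: np.ndarray) -> np.ndarray:
        """Write rew[k] into sub-buffer buffer_ids[k]; return the global indices used."""
        indices = self.offset[buffer_ids] + self.ptr[buffer_ids]
        self.rew[indices] = rew
        # advance each touched slice circularly, overwriting its oldest entry when full
        self.ptr[buffer_ids] = (self.ptr[buffer_ids] + 1) % self.sub_size
        self.length[buffer_ids] = np.minimum(self.length[buffer_ids] + 1, self.sub_size)
        return indices
```

Keeping each env's transitions contiguous means episode boundaries never interleave across environments. This is what makes per-buffer prev/next lookups straightforward.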

Co-authored-by: n+e <trinkle23897@gmail.com>

This is the second commit of 6 commits mentioned in #274, which features a minor refactor of ReplayBuffer and adds two new ReplayBuffer classes called CachedReplayBuffer and ReplayBufferManager. See #274 for more details.

1. Add ReplayBufferManager (handle a list of buffers) and CachedReplayBuffer;

2. Make sure the reserved keys cannot be edited by methods like `buffer.done = xxx`;

3. Add `set_batch` method for manually choosing the batch the ReplayBuffer wants to handle;

4. Add `sample_index` method, same as `sample` but only returns the index instead of both the index and the batch data;

5. Add `prev` (one-step previous transition index), `next` (one-step next transition index) and `unfinished_index` (the last modified index whose done==False);

6. Separate `alloc_fn` method for allocating new memory for `self._meta` when a new `(key, value)` pair comes in;

7. Move buffer's documentation to `docs/tutorials/concepts.rst`.
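The `prev`/`next` semantics can be expressed using only the `done` flags. The sketch below assumes a filled, non-wrapping buffer, so index 0 starts an episode and the last index ends one. It reads "one-step previous/next transition index" as leaving the index unchanged at an episode boundary. `prev_index` and `next_index` are hypothetical helpers.

```python
import numpy as np


def prev_index(idx: np.ndarray, done: np.ndarray) -> np.ndarray:
    """Previous transition index; stays put at the first step of an episode."""
    idx = np.asarray(idx)
    candidate = np.maximum(idx - 1, 0)
    # the step before idx belongs to another episode if it was terminal
    at_start = (idx == 0) | done[candidate]
    return np.where(at_start, idx, candidate)


def next_index(idx: np.ndarray, done: np.ndarray) -> np.ndarray:
    """Next transition index; stays put at the last step of an episode."""
    idx = np.asarray(idx)
    at_end = done[idx] | (idx == len(done) - 1)
    return np.where(at_end, idx, idx + 1)
```

Clamping at episode boundaries lets n-step and frame-stacking code walk the buffer without crossing into a different episode.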

Co-authored-by: n+e <trinkle23897@gmail.com>

This is the first commit of 6 commits mentioned in #274, which features:

1. Refactor of `Class Net` to support any form of MLP.

2. Enable type check in utils.network.

3. Related changes in docs/test/examples.

4. Move atari-related network to examples/atari/atari_network.py

Co-authored-by: Trinkle23897 <trinkle23897@gmail.com>

This is the PR for the C51 algorithm: https://arxiv.org/abs/1707.06887

1. add C51 policy in tianshou/policy/modelfree/c51.py.

2. add C51 net in tianshou/utils/net/discrete.py.

3. add C51 atari example in examples/atari/atari_c51.py.

4. add C51 statement in tianshou/policy/__init__.py.

5. add C51 test in test/discrete/test_c51.py.

6. add C51 atari results in examples/atari/results/c51/.

Running `python3 atari_c51.py --task "PongNoFrameskip-v4" --batch-size 64` gives 'best_result': '20.50 ± 0.50' in epoch 9.

Running `python3 atari_c51.py --task "BreakoutNoFrameskip-v4" --n-step 1 --epoch 40` gives best_reward: 407.400000 ± 31.155096 in epoch 39.
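The core of C51 (the paper linked above) is projecting the Bellman-updated distribution r + gamma * z back onto a fixed support of atoms between v_min and v_max. Below is a NumPy sketch of that categorical projection as described in the paper. It is not taken from tianshou/policy/modelfree/c51.py, which may compute the same quantity differently. `c51_target` is a hypothetical name.

```python
import numpy as np


def c51_target(next_dist, rew, done, gamma, v_min, v_max):
    """Project r + gamma * z onto the fixed support (C51 categorical projection)."""
    batch, num_atoms = next_dist.shape
    support = np.linspace(v_min, v_max, num_atoms)
    delta_z = (v_max - v_min) / (num_atoms - 1)
    # distributional Bellman update of every atom, clipped to [v_min, v_max]
    tz = rew[:, None] + gamma * (1.0 - done[:, None]) * support[None, :]
    tz = np.clip(tz, v_min, v_max)
    # fractional position on the support; the clip guards against rounding error
    b = np.clip((tz - v_min) / delta_z, 0, num_atoms - 1)
    lower = np.floor(b).astype(int)
    upper = np.ceil(b).astype(int)
    # split each atom's probability between its two neighbouring support points
    w_lower = upper - b
    w_upper = b - lower
    w_lower[lower == upper] = 1.0  # landed exactly on a support point
    rows = np.broadcast_to(np.arange(batch)[:, None], b.shape)
    target = np.zeros_like(next_dist)
    np.add.at(target, (rows, lower), next_dist * w_lower)
    np.add.at(target, (rows, upper), next_dist * w_upper)
    return target
```

`np.add.at` is used instead of fancy-index `+=` because several atoms can map to the same support point. Unbuffered accumulation is needed so that none of their contributions are lost.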

This PR separates the `global_step` into `env_step` and `gradient_step`. In the future, the data from the collecting state will be stored under `env_step`, and the data from the updating state will be stored under `gradient_step`.

Others:

- add `rew_std` and `best_result` into the monitor

- fix network unbounded in `test/continuous/test_sac_with_il.py` and `examples/box2d/bipedal_hardcore_sac.py`

- change the dependency of ray to 1.0.0 since ray-project/ray#10134 has been resolved

Training FPS improvement (base commit is 94bfb32):

test_pdqn: 1660 (without numba) -> 1930

discrete/test_ppo: 5100 -> 5170

Since nstep has little impact on overall performance, here are the unit test results:

GAE: 4.1s -> 0.057s

nstep: 0.3s -> 0.15s (little improvement)
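For reference, GAE is the backward recursion A_t = delta_t + gamma * lambda * (1 - d_t) * A_{t+1}, where delta_t = r_t + gamma * (1 - d_t) * V(s_{t+1}) - V(s_t). The sketch below is a plain NumPy/Python version with a hypothetical function name. The numbers above mention numba, so the speedup plausibly came from compiling a loop like this one. That attribution is an assumption, not something this log states.

```python
import numpy as np


def compute_gae(rew, value, next_value, done, gamma=0.99, gae_lambda=0.95):
    """Generalized advantage estimation over a flat trajectory (episodes split by done)."""
    not_done = 1.0 - done.astype(float)
    # one-step TD errors, with no bootstrap past an episode end
    delta = rew + gamma * next_value * not_done - value
    adv = np.zeros_like(rew, dtype=float)
    running = 0.0
    for t in range(len(rew) - 1, -1, -1):
        # the (1 - done) factor resets the accumulator at episode boundaries
        running = delta[t] + gamma * gae_lambda * not_done[t] * running
        adv[t] = running
    return adv
```

The loop is inherently sequential, which is why compiling it (rather than vectorising it) is the usual way to make it fast.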

Others:

- fix a bug in ttt set_eps

- keep only sumtree in segment tree implementation

- dirty fix for asyncVenv check_id test
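A sum tree stores priorities at the leaves and partial sums in internal nodes, which turns prioritized sampling into an O(log n) prefix-sum search. The minimal sketch below rounds capacity up to a power of two so the descent maps cleanly onto leaves. `SumTreeSketch` is hypothetical and is not Tianshou's `SegmentTree`.

```python
import numpy as np


class SumTreeSketch:
    def __init__(self, size: int) -> None:
        self.size = size
        self.cap = 1
        while self.cap < size:
            self.cap *= 2
        # node i has children 2i and 2i+1; leaves live at [cap, 2 * cap)
        self.tree = np.zeros(2 * self.cap)

    def update(self, idx: int, value: float) -> None:
        i = idx + self.cap
        self.tree[i] = value
        i //= 2
        while i >= 1:  # refresh the partial sums on the path to the root
            self.tree[i] = self.tree[2 * i] + self.tree[2 * i + 1]
            i //= 2

    def total(self) -> float:
        return float(self.tree[1])

    def find_prefix_index(self, s: float) -> int:
        """Smallest idx whose cumulative sum of values[0..idx] exceeds s."""
        i = 1
        while i < self.cap:
            left = 2 * i
            if s < self.tree[left]:
                i = left
            else:
                s -= self.tree[left]
                i = left + 1
        return min(i - self.cap, self.size - 1)
```

To sample proportionally to priority, draw `s` uniformly from `[0, total())` and call `find_prefix_index(s)`.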

This PR provides scripts for the Atari DQN setting:

- A speedrun of PongNoFrameskip-v4 (finished, about half an hour in i7-8750 + GTX1060 with 1M environment steps)

- A general script for all Atari games

Since we use multiple envs for simulation, the results differ slightly from the original paper, but we consider them acceptable.

It also adds a parameter `save_only_last_obs` to the replay buffer to save memory.
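One plausible reading of `save_only_last_obs` is that only the newest frame of each stacked observation is stored. The full stack is then rebuilt by walking back through earlier transitions of the same episode. The sketch below assumes a flat, non-wrapping buffer and pads the start of an episode by repeating its first frame. `rebuild_stack` is hypothetical, and the padding rule is an assumption rather than a confirmed detail of this PR.

```python
import numpy as np


def rebuild_stack(frames: np.ndarray, done: np.ndarray, idx: int, stack_num: int) -> np.ndarray:
    """Rebuild `stack_num` frames ending at `idx` from last-frame-only storage."""
    out = []
    i = idx
    for _ in range(stack_num):
        out.append(frames[i])
        # step back only if the previous transition belongs to the same episode
        if i > 0 and not done[i - 1]:
            i -= 1
    # collected newest-first, so reverse into chronological order
    return np.stack(out[::-1])
```

Storing one frame instead of `stack_num` frames per transition cuts observation memory by roughly that factor. The cost is a few extra index lookups at sampling time.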

Co-authored-by: Trinkle23897 <463003665@qq.com>

1. add policy.eval() in all test scripts' "watch performance"

2. remove dict return support for collector preprocess_fn

3. add `__contains__` and `pop` in batch: `key in batch`, `batch.pop(key, deft)`

4. exact n_episode for a list of n_episode limitation and save fake data in cache_buffer when self.buffer is None (#184)

5. fix tensorboard logging: the x-axis stands for env step instead of gradient step; add test results into tensorboard

6. add test_returns (both GAE and nstep)

7. change the type-checking order in batch.py and converter.py so that the most common case is checked first

8. fix shape inconsistency for torch.Tensor in replay buffer

9. remove `**kwargs` in ReplayBuffer

10. remove default value in batch.split() and add merge_last argument (#185)

11. improve nstep efficiency

12. add max_batchsize in onpolicy algorithms

13. potential bugfix for subproc.wait

14. fix RecurrentActorProb

15. improve the code-coverage (from 90% to 95%) and remove the dead code

16. fix some incorrect type annotation
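The `merge_last` argument of `batch.split()` controls what happens to a final, smaller chunk. The sketch below works on plain index arrays and assumes that `merge_last` folds a short last chunk into the previous one. `split_indices` is hypothetical; Tianshou's `Batch.split` operates on the batch itself.

```python
from typing import List

import numpy as np


def split_indices(length: int, size: int, merge_last: bool = False) -> List[np.ndarray]:
    idx = np.arange(length)
    chunks = [idx[i : i + size] for i in range(0, length, size)]
    if merge_last and len(chunks) > 1 and len(chunks[-1]) < size:
        # avoid a tiny final minibatch by appending it to the one before
        last = chunks.pop()
        chunks[-1] = np.concatenate([chunks[-1], last])
    return chunks
```

Merging avoids a noisy gradient step on a handful of samples at the end of each epoch.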

The above improvements also increase the training FPS: on my computer, the previous version reached only ~1800 FPS, and this version reaches ~2050 (faster than v0.2.4.post1).

- Refactor code to remove duplicate code

- Enable async simulation for all vector envs

- Remove `collector.close` and rename `VectorEnv` to `DummyVectorEnv`

The vector env abstraction has changed.

Prior to this PR, each vector env was almost independent.

After this PR, each env is wrapped in a worker, and vector envs differ only in their worker type. In fact, users can simply use `BaseVectorEnv` with different workers; I kept `SubprocVectorEnv` and `ShmemVectorEnv` for backward compatibility.
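In the worker abstraction, a vector env talks to its workers only through a small interface. The sketch borrows the `send`/`recv` names from the renamed worker API described above. It is a single-process version of that structure. `AddEnv`, `DummyWorkerSketch` and `VectorEnvSketch` are hypothetical. A subprocess or shared-memory worker would implement the same two methods over a pipe or shared buffer instead.

```python
from typing import Any, Callable, List, Sequence


class AddEnv:
    """Toy env whose state is the running sum of actions."""

    def __init__(self) -> None:
        self.state = 0

    def step(self, action: int) -> int:
        self.state += action
        return self.state


class DummyWorkerSketch:
    """Runs its env in the current process."""

    def __init__(self, env_fn: Callable[[], Any]) -> None:
        self.env = env_fn()
        self._result = None

    def send(self, action: Any) -> None:
        self._result = self.env.step(action)

    def recv(self) -> Any:
        return self._result


class VectorEnvSketch:
    """Vector env parameterized only by its worker class."""

    def __init__(self, env_fns: Sequence[Callable[[], Any]], worker_cls=DummyWorkerSketch) -> None:
        self.workers = [worker_cls(fn) for fn in env_fns]

    def step(self, actions: List[Any]) -> List[Any]:
        for worker, action in zip(self.workers, actions):
            worker.send(action)  # a subprocess worker would return immediately here
        return [worker.recv() for worker in self.workers]
```

Splitting `send` from `recv` is what makes concurrency possible: all workers can start stepping before the vector env blocks on any result.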

Co-authored-by: n+e <463003665@qq.com>

Co-authored-by: magicly <magicly007@gmail.com>

* add policy.update to enable post process and remove collector.sample

* update doc in policy concept

* remove collector.sample in doc

* doc update of concepts

* docs

* polish

* polish policy

* remove collector.sample in docs

* minor fix

* Apply suggestions from code review

just a test

* doc fix

Co-authored-by: Trinkle23897 <463003665@qq.com>