Addresses part of #1015

### Dependencies

- move jsonargparse and docstring-parser to dependencies to run hl examples without dev

- create mujoco-py extra for legacy mujoco envs

- updated atari extra

  - removed atari-py and gym dependencies

  - added ALE-py, autorom, and shimmy

- created robotics extra for HER-DDPG

### Mac specific

- only install envpool when not on mac

- mujoco-py does not work on macOS newer than Monterey (https://github.com/openai/mujoco-py/issues/777)

- D4RL also fails due to its dependency on mujoco-py (https://github.com/Farama-Foundation/D4RL/issues/232)

### Other

- reduced training-num/test-num in example files to a number ≤ 20 (examples with 100 led to too many open files)

- rendering for Mujoco envs needs to be fixed on gymnasium side (https://github.com/Farama-Foundation/Gymnasium/issues/749)

---------

Co-authored-by: Maximilian Huettenrauch <m.huettenrauch@appliedai.de>

Co-authored-by: Michael Panchenko <35432522+MischaPanch@users.noreply.github.com>

Closes #947

This removes all kwargs from all policy constructors. While doing that, I also improved several names and added a whole lot of TODOs.

## Functional changes:

1. Added the possibility to pass `None` as `critic2` and `critic2_optim`. In fact, the default behavior should then cover the vast majority of cases

2. Added a function called `clone_optimizer` as a temporary measure to support passing `critic2_optim=None`
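A minimal sketch of what a `clone_optimizer`-style helper could look like, assuming the optimizer exposes its constructor hyperparameters in a `defaults` dict (as `torch.optim.Optimizer` does). `ToySGD` is a hypothetical stand-in so that the snippet runs without PyTorch; the actual helper in this PR may differ.

```python
from typing import Any, Iterable


class ToySGD:
    """Hypothetical stand-in for a torch.optim.Optimizer subclass."""

    def __init__(self, params: Iterable[Any], lr: float = 0.01, momentum: float = 0.0) -> None:
        self.params = list(params)
        # torch optimizers keep their constructor hyperparameters in `defaults`
        self.defaults = {"lr": lr, "momentum": momentum}


def clone_optimizer(optim: Any, new_params: Iterable[Any]) -> Any:
    """Build a new optimizer of the same class and hyperparameters over `new_params`.

    Assumption: every key in `optim.defaults` is a valid constructor keyword.
    """
    return type(optim)(new_params, **optim.defaults)


# The optimizer for the second critic is derived from the first one.
critic_optim = ToySGD(["critic_w"], lr=3e-4, momentum=0.9)
critic2_optim = clone_optimizer(critic_optim, ["critic2_w"])
```

The clone shares no state with the original; only the class and hyperparameters are reused.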

## Breaking changes:

1. `action_space` is no longer optional. In fact, it was already non-optional, as there was a ValueError in `BasePolicy.__init__`. Several examples were fixed to reflect that

2. `reward_normalization` removed from DDPG and children. Passing it as `True` was never allowed there; an error would have been raised in `compute_n_step_reward`. Now I removed it from the interface

3. Renamed `critic1` and similar to `critic`, in order to have uniform interfaces. Note that the `critic` in DDPG was optional for the sole reason that child classes used `critic1`. I removed this optionality (DDPG can't do anything with `critic=None`)

4. Several renamings of fields (mostly private to public, so backwards compatible)

## Additional changes:

1. Removed type and default declarations from docstrings. This kind of duplication is really not necessary

2. Policy constructors are now only called using named arguments, not a fragile mixture of positional and named as before

3. Minor beautifications in typing and code

4. Generally shortened docstrings and made them uniform across all policies (hopefully)
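To illustrate the named-argument calling style from item 2: a small sketch with a hypothetical `ToyPolicy` (not a real tianshou class). The keyword-only marker `*` in the signature is an assumption added for illustration; the PR only states that call sites now use named arguments.

```python
class ToyPolicy:
    """Hypothetical policy; the bare `*` makes every argument keyword-only."""

    def __init__(self, *, actor: str, critic: str, action_space: str, tau: float = 0.005) -> None:
        self.actor = actor
        self.critic = critic
        self.action_space = action_space
        self.tau = tau


# Named arguments: the order no longer matters and a swapped argument is easy to spot.
policy = ToyPolicy(action_space="Box(-1, 1)", critic="critic_net", actor="actor_net")

# A positional call is rejected outright instead of silently binding the wrong values.
try:
    ToyPolicy("actor_net", "critic_net", "Box(-1, 1)")
    positional_rejected = False
except TypeError:
    positional_rejected = True
```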

## Comment:

With these changes, several problems in tianshou's inheritance hierarchy become more apparent. I tried highlighting them for future work.

---------

Co-authored-by: Dominik Jain <d.jain@appliedai.de>

Closes #941

rtfd build link: https://readthedocs.org/projects/tianshou/builds/22019877/

Also -- fix two small issues reported by users, see #928 and #930

Note: I created the branch in thu-ml:tianshou instead of Trinkle23897:tianshou to quickly check the rtfd build. It's not a good process, since every commit triggers the CI pipelines twice :(

Changes:

- Disclaimer in README

- Replaced all occurrences of Gym with Gymnasium

- Removed code that is now dead since we no longer need to support the old step API

- Updated type hints to only allow the new step API

- Increased required version of envpool to support Gymnasium

- Increased required version of PettingZoo to support Gymnasium

- Updated `PettingZooEnv` to only use the new step API, removed hack to also support old API

- I had to add some `# type: ignore` comments, due to new type hinting in Gymnasium. I'm not that familiar with type hinting, but I believe that the issue is on the Gymnasium side and we are looking into it.

- Had to update `MyTestEnv` to support the `options` kwarg

- Skip NNI tests because they still use OpenAI Gym

- Also allow `PettingZooEnv` in vector environment

- Updated doc page about ReplayBuffer to also talk about terminated and truncated flags.
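For reference, a small sketch of the new step API that the changes above target: `reset` accepts `seed` and `options` and returns `(obs, info)`, and `step` returns a 5-tuple with separate `terminated` and `truncated` flags. `CountdownEnv` and `rollout` are hypothetical and only mimic the Gymnasium signatures; the snippet deliberately does not import gymnasium.

```python
from __future__ import annotations

from typing import Any, Optional


class CountdownEnv:
    """Toy env following the Gymnasium-style reset/step signatures."""

    def __init__(self, goal: int = 3, max_steps: int = 5) -> None:
        self.goal = goal
        self.max_steps = max_steps
        self.state = 0
        self.steps = 0

    def reset(self, *, seed: Optional[int] = None, options: Optional[dict] = None) -> tuple[int, dict]:
        # `options` carries per-reset configuration, here an optional start state.
        self.state = (options or {}).get("start", 0)
        self.steps = 0
        return self.state, {}

    def step(self, action: int) -> tuple[int, float, bool, bool, dict]:
        self.state += action
        self.steps += 1
        terminated = self.state >= self.goal  # the task itself ended
        truncated = not terminated and self.steps >= self.max_steps  # time limit hit
        reward = 1.0 if terminated else 0.0
        return self.state, reward, terminated, truncated, {}


def rollout(env: CountdownEnv, action: int, **reset_kwargs: Any) -> tuple[int, bool, bool]:
    """Run one episode with a constant action; the old `done` is `terminated or truncated`."""
    obs, _ = env.reset(**reset_kwargs)
    while True:
        obs, _, terminated, truncated, _ = env.step(action)
        if terminated or truncated:
            return obs, terminated, truncated
```

Keeping the two flags apart matters for bootstrapping: a truncated episode did not reach a terminal state, so value targets can still bootstrap from the last observation.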

Still need to do:

- Update the Jupyter notebooks in docs

- Check the entire code base for more dead code (from compatibility stuff)

- Check the reset functions of all environments/wrappers in code base to make sure they use the `options` kwarg

- Someone might want to check test_env_finite.py

- Is it okay to allow `PettingZooEnv` in vector environments? Might need to update docs?

IMHO, a unit test, compared with integration tests, end-to-end tests, or other tests, usually tests only a single method/function/class/etc. and often relies on many stubs and mocks, so it is far from a typical/real usage scenario. Integration or e2e tests, on the other hand, mock less and are closer to the real case.

Tianshou says:

> ... tests include the full agent training procedure for all of the implemented algorithms

It seems that this is more than a unit test and falls into the category of integration or even e2e tests.

## implementation

I implemented HER solely as a replay buffer. It works by temporarily re-writing the transition storage (`self._meta`) directly during the `sample_indices()` call. The original transitions are cached and restored at the beginning of the next sampling or when other methods are called. This makes sure that, for example, n-step return calculation can be done without altering the policy.

There is also a problem with the original index sampling: the sampled indices are not guaranteed to be from different episodes. So I decided to perform the re-writing per episode. This guarantees that sampled transitions from the same episode have the same re-written goal. It also makes the re-writing ratio calculation differ slightly from the paper, but the difference will be small if there are many episodes in the buffer.

In the current commit, the HER replay buffer only supports the 'future' strategy and online sampling. This is the best HER variant in terms of performance and memory efficiency.

I also added a few more convenience replay buffers (`HERVectorReplayBuffer`, `HERReplayBufferManager`), a test env (`MyGoalEnv`), a gym wrapper (`TruncatedAsTerminated`), unit tests, and a simple example (examples/offline/fetch_her_ddpg.py).
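The per-episode 'future' re-writing described above can be sketched roughly as follows. This is a simplified illustration, not the buffer code from this PR: it returns relabelled copies instead of re-writing and restoring `self._meta`, and the names `relabel_future` and `relabel_prob`, the dict layout, the sparse 0/-1 reward, and the rule of drawing the future step at or after the latest sampled step of each episode are all assumptions.

```python
import random
from collections import defaultdict


def relabel_future(episodes, sampled, rng, relabel_prob=0.8):
    """Relabel sampled transitions with one future achieved goal per episode.

    episodes: list of episodes, each a list of dicts with
              'achieved_goal' (after the step) and 'desired_goal'.
    sampled:  list of (episode_index, step_index) pairs.
    """
    steps_by_episode = defaultdict(list)
    for ep, t in sampled:
        steps_by_episode[ep].append(t)

    # One decision and one new goal per episode, shared by all of its sampled steps.
    new_goal = {}
    for ep, steps in steps_by_episode.items():
        if rng.random() < relabel_prob:
            future_t = rng.randint(max(steps), len(episodes[ep]) - 1)
            new_goal[ep] = episodes[ep][future_t]["achieved_goal"]

    batch = []
    for ep, t in sampled:
        transition = dict(episodes[ep][t])  # copy, the stored episode stays untouched
        if ep in new_goal:
            transition["desired_goal"] = new_goal[ep]
        # Sparse reward recomputed against the (possibly re-written) goal.
        reached = transition["achieved_goal"] == transition["desired_goal"]
        transition["reward"] = 0.0 if reached else -1.0
        batch.append(transition)
    return batch


episodes = [
    [{"achieved_goal": t + 1, "desired_goal": 99} for t in range(4)],
    [{"achieved_goal": 10 * (t + 1), "desired_goal": 99} for t in range(3)],
]
sampled = [(0, 0), (0, 2), (1, 1)]
batch = relabel_future(episodes, sampled, random.Random(0), relabel_prob=1.0)
```

Because the decision is made per episode rather than per transition, the effective re-writing ratio depends on how the sampled indices are spread over episodes, which is the deviation from the paper mentioned above.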

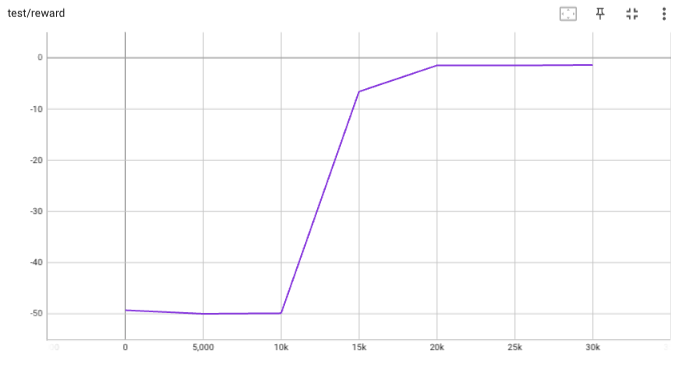

## verification

I have added unit tests for almost everything I have implemented.

The HER replay buffer was also tested using DDPG on the [`FetchReach-v3` env](https://github.com/Farama-Foundation/Gymnasium-Robotics). I used the default DDPG parameters from the mujoco example and didn't tune anything further to get this good result! (train script: examples/offline/fetch_her_ddpg.py).

- This PR adds the checks that are defined in the Makefile as pre-commit hooks.

- Hopefully, the checks are equivalent to those from the Makefile, but I can't guarantee it.

- CI remains as it is.

- As I pointed out on Discord, I experienced some conflicts between flake8 and yapf, so it might be better to transition to some other combination (e.g. black).

This PR implements BCQPolicy, which can be used to train an offline agent in environments with a continuous action space. An experimental result on 'halfcheetah-expert-v1', a d4rl environment (for Offline Reinforcement Learning), is provided.

Example usage is in examples/offline/offline_bcq.py.