Initial Commit

This commit is contained in:

commit

fb5c21557a

134

.gitignore

vendored

Normal file

134

.gitignore

vendored

Normal file

@ -0,0 +1,134 @@

|

||||

#

|

||||

*.sh

|

||||

logdir*

|

||||

vis_*

|

||||

|

||||

# Byte-compiled / optimized / DLL files

|

||||

__pycache__/

|

||||

*.py[cod]

|

||||

*$py.class

|

||||

|

||||

# C extensions

|

||||

*.so

|

||||

|

||||

# Distribution / packaging

|

||||

.Python

|

||||

build/

|

||||

develop-eggs/

|

||||

dist/

|

||||

downloads/

|

||||

eggs/

|

||||

.eggs/

|

||||

lib/

|

||||

lib64/

|

||||

parts/

|

||||

sdist/

|

||||

var/

|

||||

wheels/

|

||||

pip-wheel-metadata/

|

||||

share/python-wheels/

|

||||

*.egg-info/

|

||||

.installed.cfg

|

||||

*.egg

|

||||

MANIFEST

|

||||

|

||||

# PyInstaller

|

||||

# Usually these files are written by a python script from a template

|

||||

# before PyInstaller builds the exe, so as to inject date/other infos into it.

|

||||

*.manifest

|

||||

*.spec

|

||||

|

||||

# Installer logs

|

||||

pip-log.txt

|

||||

pip-delete-this-directory.txt

|

||||

|

||||

# Unit test / coverage reports

|

||||

htmlcov/

|

||||

.tox/

|

||||

.nox/

|

||||

.coverage

|

||||

.coverage.*

|

||||

.cache

|

||||

nosetests.xml

|

||||

coverage.xml

|

||||

*.cover

|

||||

*.py,cover

|

||||

.hypothesis/

|

||||

.pytest_cache/

|

||||

|

||||

# Translations

|

||||

*.mo

|

||||

*.pot

|

||||

|

||||

# Django stuff:

|

||||

*.log

|

||||

local_settings.py

|

||||

db.sqlite3

|

||||

db.sqlite3-journal

|

||||

|

||||

# Flask stuff:

|

||||

instance/

|

||||

.webassets-cache

|

||||

|

||||

# Scrapy stuff:

|

||||

.scrapy

|

||||

|

||||

# Sphinx documentation

|

||||

docs/_build/

|

||||

|

||||

# PyBuilder

|

||||

target/

|

||||

|

||||

# Jupyter Notebook

|

||||

.ipynb_checkpoints

|

||||

|

||||

# IPython

|

||||

profile_default/

|

||||

ipython_config.py

|

||||

|

||||

# pyenv

|

||||

.python-version

|

||||

|

||||

# pipenv

|

||||

# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

|

||||

# However, in case of collaboration, if having platform-specific dependencies or dependencies

|

||||

# having no cross-platform support, pipenv may install dependencies that don't work, or not

|

||||

# install all needed dependencies.

|

||||

#Pipfile.lock

|

||||

|

||||

# PEP 582; used by e.g. github.com/David-OConnor/pyflow

|

||||

__pypackages__/

|

||||

|

||||

# Celery stuff

|

||||

celerybeat-schedule

|

||||

celerybeat.pid

|

||||

|

||||

# SageMath parsed files

|

||||

*.sage.py

|

||||

|

||||

# Environments

|

||||

.env

|

||||

.venv

|

||||

env/

|

||||

venv/

|

||||

ENV/

|

||||

env.bak/

|

||||

venv.bak/

|

||||

|

||||

# Spyder project settings

|

||||

.spyderproject

|

||||

.spyproject

|

||||

|

||||

# Rope project settings

|

||||

.ropeproject

|

||||

|

||||

# mkdocs documentation

|

||||

/site

|

||||

|

||||

# mypy

|

||||

.mypy_cache/

|

||||

.dmypy.json

|

||||

dmypy.json

|

||||

|

||||

# Pyre type checker

|

||||

.pyre/

|

||||

21

LICENSE

Normal file

21

LICENSE

Normal file

@ -0,0 +1,21 @@

|

||||

MIT License

|

||||

|

||||

Copyright (c) 2023 NM512

|

||||

|

||||

Permission is hereby granted, free of charge, to any person obtaining a copy

|

||||

of this software and associated documentation files (the "Software"), to deal

|

||||

in the Software without restriction, including without limitation the rights

|

||||

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

||||

copies of the Software, and to permit persons to whom the Software is

|

||||

furnished to do so, subject to the following conditions:

|

||||

|

||||

The above copyright notice and this permission notice shall be included in all

|

||||

copies or substantial portions of the Software.

|

||||

|

||||

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

||||

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

||||

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

||||

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

||||

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

||||

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

||||

SOFTWARE.

|

||||

33

README.md

Normal file

33

README.md

Normal file

@ -0,0 +1,33 @@

|

||||

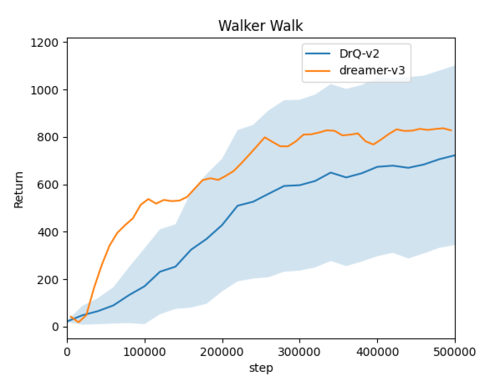

# Dreamer-v3 Pytorch

|

||||

Pytorch implementation of [Mastering Diverse Domains through World Models](https://arxiv.org/abs/2301.04104v1)

|

||||

|

||||

|

||||

|

||||

## Instructions

|

||||

Get dependencies:

|

||||

```

|

||||

pip install -r requirements.txt

|

||||

```

|

||||

Train the agent:

|

||||

```

|

||||

python3 dreamer.py --configs defaults --logdir $ABSOLUTEPATH_TO_SAVE_LOG

|

||||

```

|

||||

Monitor results:

|

||||

```

|

||||

tensorboard --logdir $ABSOLUTEPATH_TO_SAVE_LOG

|

||||

```

|

||||

## Evaluation Results

|

||||

work-in-progress

|

||||

|

||||

|

||||

|

||||

## Awesome Environments used for testing:

|

||||

- Deepmind control suite: https://github.com/deepmind/dm_control

|

||||

- will be added soon

|

||||

|

||||

## Acknowledgments

|

||||

This code is heavily inspired by the following works:

|

||||

- danijar's Dreamer-v2 tensorflow implementation: https://github.com/danijar/dreamerv2

|

||||

- jsikyoon's Dreamer-v2 pytorch implementation: https://github.com/jsikyoon/dreamer-torch

|

||||

- RajGhugare19's Dreamer-v2 pytorch implementation: https://github.com/RajGhugare19/dreamerv2

|

||||

- denisyarats's DrQ-v2 original implementation: https://github.com/facebookresearch/drqv2

|

||||

136

configs.yaml

Normal file

136

configs.yaml

Normal file

@ -0,0 +1,136 @@

|

||||

defaults:

|

||||

|

||||

logdir: null

|

||||

traindir: null

|

||||

evaldir: null

|

||||

offline_traindir: ''

|

||||

offline_evaldir: ''

|

||||

seed: 0

|

||||

steps: 5e5

|

||||

eval_every: 1e4

|

||||

log_every: 1e4

|

||||

reset_every: 0

|

||||

#gpu_growth: True

|

||||

device: 'cuda:0'

|

||||

precision: 16

|

||||

debug: False

|

||||

expl_gifs: False

|

||||

|

||||

# Environment

|

||||

task: 'dmc_walker_walk'

|

||||

size: [64, 64]

|

||||

envs: 1

|

||||

action_repeat: 2

|

||||

time_limit: 1000

|

||||

grayscale: False

|

||||

prefill: 2500

|

||||

eval_noise: 0.0

|

||||

reward_trans: 'symlog'

|

||||

obs_trans: 'normalize'

|

||||

critic_trans: 'symlog'

|

||||

reward_EMA: True

|

||||

|

||||

# Model

|

||||

dyn_cell: 'gru_layer_norm'

|

||||

dyn_hidden: 512

|

||||

dyn_deter: 512

|

||||

dyn_stoch: 32

|

||||

dyn_discrete: 32

|

||||

dyn_input_layers: 1

|

||||

dyn_output_layers: 1

|

||||

dyn_rec_depth: 1

|

||||

dyn_shared: False

|

||||

dyn_mean_act: 'none'

|

||||

dyn_std_act: 'sigmoid2'

|

||||

dyn_min_std: 0.1

|

||||

dyn_temp_post: True

|

||||

grad_heads: ['image', 'reward', 'discount']

|

||||

units: 256

|

||||

reward_layers: 2

|

||||

discount_layers: 2

|

||||

value_layers: 2

|

||||

actor_layers: 2

|

||||

act: 'SiLU'

|

||||

norm: 'LayerNorm'

|

||||

cnn_depth: 32

|

||||

encoder_kernels: [3, 3, 3, 3]

|

||||

decoder_kernels: [3, 3, 3, 3]

|

||||

# changed here

|

||||

value_head: 'twohot'

|

||||

reward_head: 'twohot'

|

||||

kl_lscale: '0.1'

|

||||

kl_rscale: '0.5'

|

||||

kl_free: '1.0'

|

||||

kl_forward: False

|

||||

pred_discount: True

|

||||

discount_scale: 1.0

|

||||

reward_scale: 1.0

|

||||

weight_decay: 0.0

|

||||

unimix_ratio: 0.01

|

||||

|

||||

# Training

|

||||

batch_size: 16

|

||||

batch_length: 64

|

||||

train_every: 5

|

||||

train_steps: 1

|

||||

pretrain: 100

|

||||

model_lr: 1e-4

|

||||

opt_eps: 1e-8

|

||||

grad_clip: 1000

|

||||

value_lr: 3e-5

|

||||

actor_lr: 3e-5

|

||||

ac_opt_eps: 1e-5

|

||||

value_grad_clip: 100

|

||||

actor_grad_clip: 100

|

||||

dataset_size: 0

|

||||

oversample_ends: False

|

||||

slow_value_target: True

|

||||

slow_actor_target: True

|

||||

slow_target_update: 50

|

||||

slow_target_fraction: 0.01

|

||||

opt: 'adam'

|

||||

|

||||

# Behavior.

|

||||

discount: 0.997

|

||||

discount_lambda: 0.95

|

||||

imag_horizon: 15

|

||||

imag_gradient: 'dynamics'

|

||||

imag_gradient_mix: '0.1'

|

||||

imag_sample: True

|

||||

actor_dist: 'trunc_normal'

|

||||

actor_entropy: '3e-4'

|

||||

actor_state_entropy: 0.0

|

||||

actor_init_std: 1.0

|

||||

actor_min_std: 0.1

|

||||

actor_disc: 5

|

||||

actor_temp: 0.1

|

||||

actor_outscale: 0.0

|

||||

expl_amount: 0.0

|

||||

eval_state_mean: False

|

||||

collect_dyn_sample: True

|

||||

behavior_stop_grad: True

|

||||

value_decay: 0.0

|

||||

future_entropy: False

|

||||

|

||||

# Exploration

|

||||

expl_behavior: 'greedy'

|

||||

expl_until: 0

|

||||

expl_extr_scale: 0.0

|

||||

expl_intr_scale: 1.0

|

||||

disag_target: 'stoch'

|

||||

disag_log: True

|

||||

disag_models: 10

|

||||

disag_offset: 1

|

||||

disag_layers: 4

|

||||

disag_units: 400

|

||||

disag_action_cond: False

|

||||

|

||||

debug:

|

||||

|

||||

debug: True

|

||||

pretrain: 1

|

||||

prefill: 1

|

||||

train_steps: 1

|

||||

batch_size: 10

|

||||

batch_length: 20

|

||||

|

||||

343

dreamer.py

Normal file

343

dreamer.py

Normal file

@ -0,0 +1,343 @@

|

||||

import argparse

|

||||

import collections

|

||||

import functools

|

||||

import os

|

||||

import pathlib

|

||||

import sys

|

||||

import warnings

|

||||

|

||||

os.environ["MUJOCO_GL"] = "egl"

|

||||

|

||||

import numpy as np

|

||||

import ruamel.yaml as yaml

|

||||

|

||||

sys.path.append(str(pathlib.Path(__file__).parent))

|

||||

|

||||

import exploration as expl

|

||||

import models

|

||||

import tools

|

||||

import wrappers

|

||||

|

||||

import torch

|

||||

from torch import nn

|

||||

from torch import distributions as torchd

|

||||

|

||||

to_np = lambda x: x.detach().cpu().numpy()

|

||||

|

||||

|

||||

class Dreamer(nn.Module):

|

||||

def __init__(self, config, logger, dataset):

|

||||

super(Dreamer, self).__init__()

|

||||

self._config = config

|

||||

self._logger = logger

|

||||

self._should_log = tools.Every(config.log_every)

|

||||

self._should_train = tools.Every(config.train_every)

|

||||

self._should_pretrain = tools.Once()

|

||||

self._should_reset = tools.Every(config.reset_every)

|

||||

self._should_expl = tools.Until(int(config.expl_until / config.action_repeat))

|

||||

self._metrics = {}

|

||||

self._step = count_steps(config.traindir)

|

||||

# Schedules.

|

||||

config.actor_entropy = lambda x=config.actor_entropy: tools.schedule(

|

||||

x, self._step

|

||||

)

|

||||

config.actor_state_entropy = (

|

||||

lambda x=config.actor_state_entropy: tools.schedule(x, self._step)

|

||||

)

|

||||

config.imag_gradient_mix = lambda x=config.imag_gradient_mix: tools.schedule(

|

||||

x, self._step

|

||||

)

|

||||

self._dataset = dataset

|

||||

self._wm = models.WorldModel(self._step, config)

|

||||

self._task_behavior = models.ImagBehavior(

|

||||

config, self._wm, config.behavior_stop_grad

|

||||

)

|

||||

reward = lambda f, s, a: self._wm.heads["reward"](f).mean

|

||||

self._expl_behavior = dict(

|

||||

greedy=lambda: self._task_behavior,

|

||||

random=lambda: expl.Random(config),

|

||||

plan2explore=lambda: expl.Plan2Explore(config, self._wm, reward),

|

||||

)[config.expl_behavior]()

|

||||

|

||||

def __call__(self, obs, reset, state=None, reward=None, training=True):

|

||||

step = self._step

|

||||

if self._should_reset(step):

|

||||

state = None

|

||||

if state is not None and reset.any():

|

||||

mask = 1 - reset

|

||||

for key in state[0].keys():

|

||||

for i in range(state[0][key].shape[0]):

|

||||

state[0][key][i] *= mask[i]

|

||||

for i in range(len(state[1])):

|

||||

state[1][i] *= mask[i]

|

||||

if training and self._should_train(step):

|

||||

steps = (

|

||||

self._config.pretrain

|

||||

if self._should_pretrain()

|

||||

else self._config.train_steps

|

||||

)

|

||||

for _ in range(steps):

|

||||

self._train(next(self._dataset))

|

||||

if self._should_log(step):

|

||||

for name, values in self._metrics.items():

|

||||

self._logger.scalar(name, float(np.mean(values)))

|

||||

self._metrics[name] = []

|

||||

openl = self._wm.video_pred(next(self._dataset))

|

||||

self._logger.video("train_openl", to_np(openl))

|

||||

self._logger.write(fps=True)

|

||||

|

||||

policy_output, state = self._policy(obs, state, training)

|

||||

|

||||

if training:

|

||||

self._step += len(reset)

|

||||

self._logger.step = self._config.action_repeat * self._step

|

||||

return policy_output, state

|

||||

|

||||

def _policy(self, obs, state, training):

|

||||

if state is None:

|

||||

batch_size = len(obs["image"])

|

||||

latent = self._wm.dynamics.initial(len(obs["image"]))

|

||||

action = torch.zeros((batch_size, self._config.num_actions)).to(

|

||||

self._config.device

|

||||

)

|

||||

else:

|

||||

latent, action = state

|

||||

embed = self._wm.encoder(self._wm.preprocess(obs))

|

||||

latent, _ = self._wm.dynamics.obs_step(

|

||||

latent, action, embed, self._config.collect_dyn_sample

|

||||

)

|

||||

if self._config.eval_state_mean:

|

||||

latent["stoch"] = latent["mean"]

|

||||

feat = self._wm.dynamics.get_feat(latent)

|

||||

if not training:

|

||||

actor = self._task_behavior.actor(feat)

|

||||

action = actor.mode()

|

||||

elif self._should_expl(self._step):

|

||||

actor = self._expl_behavior.actor(feat)

|

||||

action = actor.sample()

|

||||

else:

|

||||

actor = self._task_behavior.actor(feat)

|

||||

action = actor.sample()

|

||||

logprob = actor.log_prob(action)

|

||||

latent = {k: v.detach() for k, v in latent.items()}

|

||||

action = action.detach()

|

||||

if self._config.actor_dist == "onehot_gumble":

|

||||

action = torch.one_hot(

|

||||

torch.argmax(action, dim=-1), self._config.num_actions

|

||||

)

|

||||

action = self._exploration(action, training)

|

||||

policy_output = {"action": action, "logprob": logprob}

|

||||

state = (latent, action)

|

||||

return policy_output, state

|

||||

|

||||

def _exploration(self, action, training):

|

||||

amount = self._config.expl_amount if training else self._config.eval_noise

|

||||

if amount == 0:

|

||||

return action

|

||||

if "onehot" in self._config.actor_dist:

|

||||

probs = amount / self._config.num_actions + (1 - amount) * action

|

||||

return tools.OneHotDist(probs=probs).sample()

|

||||

else:

|

||||

return torch.clip(torchd.normal.Normal(action, amount).sample(), -1, 1)

|

||||

raise NotImplementedError(self._config.action_noise)

|

||||

|

||||

def _train(self, data):

|

||||

metrics = {}

|

||||

post, context, mets = self._wm._train(data)

|

||||

metrics.update(mets)

|

||||

start = post

|

||||

if self._config.pred_discount: # Last step could be terminal.

|

||||

start = {k: v[:, :-1] for k, v in post.items()}

|

||||

context = {k: v[:, :-1] for k, v in context.items()}

|

||||

reward = lambda f, s, a: self._wm.heads["reward"](

|

||||

self._wm.dynamics.get_feat(s)

|

||||

).mode()

|

||||

metrics.update(self._task_behavior._train(start, reward)[-1])

|

||||

if self._config.expl_behavior != "greedy":

|

||||

if self._config.pred_discount:

|

||||

data = {k: v[:, :-1] for k, v in data.items()}

|

||||

mets = self._expl_behavior.train(start, context, data)[-1]

|

||||

metrics.update({"expl_" + key: value for key, value in mets.items()})

|

||||

for name, value in metrics.items():

|

||||

if not name in self._metrics.keys():

|

||||

self._metrics[name] = [value]

|

||||

else:

|

||||

self._metrics[name].append(value)

|

||||

|

||||

|

||||

def count_steps(folder):

|

||||

return sum(int(str(n).split("-")[-1][:-4]) - 1 for n in folder.glob("*.npz"))

|

||||

|

||||

|

||||

def make_dataset(episodes, config):

|

||||

generator = tools.sample_episodes(

|

||||

episodes, config.batch_length, config.oversample_ends

|

||||

)

|

||||

dataset = tools.from_generator(generator, config.batch_size)

|

||||

return dataset

|

||||

|

||||

|

||||

def make_env(config, logger, mode, train_eps, eval_eps):

|

||||

suite, task = config.task.split("_", 1)

|

||||

if suite == "dmc":

|

||||

env = wrappers.DeepMindControl(task, config.action_repeat, config.size)

|

||||

env = wrappers.NormalizeActions(env)

|

||||

elif suite == "atari":

|

||||

env = wrappers.Atari(

|

||||

task,

|

||||

config.action_repeat,

|

||||

config.size,

|

||||

grayscale=config.grayscale,

|

||||

life_done=False and ("train" in mode),

|

||||

sticky_actions=True,

|

||||

all_actions=True,

|

||||

)

|

||||

env = wrappers.OneHotAction(env)

|

||||

elif suite == "dmlab":

|

||||

env = wrappers.DeepMindLabyrinth(

|

||||

task, mode if "train" in mode else "test", config.action_repeat

|

||||

)

|

||||

env = wrappers.OneHotAction(env)

|

||||

else:

|

||||

raise NotImplementedError(suite)

|

||||

env = wrappers.TimeLimit(env, config.time_limit)

|

||||

env = wrappers.SelectAction(env, key="action")

|

||||

if (mode == "train") or (mode == "eval"):

|

||||

callbacks = [

|

||||

functools.partial(

|

||||

process_episode, config, logger, mode, train_eps, eval_eps

|

||||

)

|

||||

]

|

||||

env = wrappers.CollectDataset(env, callbacks)

|

||||

env = wrappers.RewardObs(env)

|

||||

return env

|

||||

|

||||

|

||||

def process_episode(config, logger, mode, train_eps, eval_eps, episode):

|

||||

directory = dict(train=config.traindir, eval=config.evaldir)[mode]

|

||||

cache = dict(train=train_eps, eval=eval_eps)[mode]

|

||||

filename = tools.save_episodes(directory, [episode])[0]

|

||||

length = len(episode["reward"]) - 1

|

||||

score = float(episode["reward"].astype(np.float64).sum())

|

||||

video = episode["image"]

|

||||

if mode == "eval":

|

||||

cache.clear()

|

||||

if mode == "train" and config.dataset_size:

|

||||

total = 0

|

||||

for key, ep in reversed(sorted(cache.items(), key=lambda x: x[0])):

|

||||

if total <= config.dataset_size - length:

|

||||

total += len(ep["reward"]) - 1

|

||||

else:

|

||||

del cache[key]

|

||||

logger.scalar("dataset_size", total + length)

|

||||

cache[str(filename)] = episode

|

||||

print(f"{mode.title()} episode has {length} steps and return {score:.1f}.")

|

||||

logger.scalar(f"{mode}_return", score)

|

||||

logger.scalar(f"{mode}_length", length)

|

||||

logger.scalar(f"{mode}_episodes", len(cache))

|

||||

if mode == "eval" or config.expl_gifs:

|

||||

logger.video(f"{mode}_policy", video[None])

|

||||

logger.write()

|

||||

|

||||

|

||||

def main(config):

|

||||

logdir = pathlib.Path(config.logdir).expanduser()

|

||||

config.traindir = config.traindir or logdir / "train_eps"

|

||||

config.evaldir = config.evaldir or logdir / "eval_eps"

|

||||

config.steps //= config.action_repeat

|

||||

config.eval_every //= config.action_repeat

|

||||

config.log_every //= config.action_repeat

|

||||

config.time_limit //= config.action_repeat

|

||||

config.act = getattr(torch.nn, config.act)

|

||||

config.norm = getattr(torch.nn, config.norm)

|

||||

|

||||

print("Logdir", logdir)

|

||||

logdir.mkdir(parents=True, exist_ok=True)

|

||||

config.traindir.mkdir(parents=True, exist_ok=True)

|

||||

config.evaldir.mkdir(parents=True, exist_ok=True)

|

||||

step = count_steps(config.traindir)

|

||||

logger = tools.Logger(logdir, config.action_repeat * step)

|

||||

|

||||

print("Create envs.")

|

||||

if config.offline_traindir:

|

||||

directory = config.offline_traindir.format(**vars(config))

|

||||

else:

|

||||

directory = config.traindir

|

||||

train_eps = tools.load_episodes(directory, limit=config.dataset_size)

|

||||

if config.offline_evaldir:

|

||||

directory = config.offline_evaldir.format(**vars(config))

|

||||

else:

|

||||

directory = config.evaldir

|

||||

eval_eps = tools.load_episodes(directory, limit=1)

|

||||

make = lambda mode: make_env(config, logger, mode, train_eps, eval_eps)

|

||||

train_envs = [make("train") for _ in range(config.envs)]

|

||||

eval_envs = [make("eval") for _ in range(config.envs)]

|

||||

acts = train_envs[0].action_space

|

||||

config.num_actions = acts.n if hasattr(acts, "n") else acts.shape[0]

|

||||

|

||||

if not config.offline_traindir:

|

||||

prefill = max(0, config.prefill - count_steps(config.traindir))

|

||||

print(f"Prefill dataset ({prefill} steps).")

|

||||

if hasattr(acts, "discrete"):

|

||||

random_actor = tools.OneHotDist(

|

||||

torch.zeros_like(torch.Tensor(acts.low))[None]

|

||||

)

|

||||

else:

|

||||

random_actor = torchd.independent.Independent(

|

||||

torchd.uniform.Uniform(

|

||||

torch.Tensor(acts.low)[None], torch.Tensor(acts.high)[None]

|

||||

),

|

||||

1,

|

||||

)

|

||||

|

||||

def random_agent(o, d, s, r):

|

||||

action = random_actor.sample()

|

||||

logprob = random_actor.log_prob(action)

|

||||

return {"action": action, "logprob": logprob}, None

|

||||

|

||||

tools.simulate(random_agent, train_envs, prefill)

|

||||

tools.simulate(random_agent, eval_envs, episodes=1)

|

||||

logger.step = config.action_repeat * count_steps(config.traindir)

|

||||

|

||||

print("Simulate agent.")

|

||||

train_dataset = make_dataset(train_eps, config)

|

||||

eval_dataset = make_dataset(eval_eps, config)

|

||||

agent = Dreamer(config, logger, train_dataset).to(config.device)

|

||||

agent.requires_grad_(requires_grad=False)

|

||||

if (logdir / "latest_model.pt").exists():

|

||||

agent.load_state_dict(torch.load(logdir / "latest_model.pt"))

|

||||

agent._should_pretrain._once = False

|

||||

|

||||

state = None

|

||||

while agent._step < config.steps:

|

||||

logger.write()

|

||||

print("Start evaluation.")

|

||||

video_pred = agent._wm.video_pred(next(eval_dataset))

|

||||

logger.video("eval_openl", to_np(video_pred))

|

||||

eval_policy = functools.partial(agent, training=False)

|

||||

tools.simulate(eval_policy, eval_envs, episodes=1)

|

||||

print("Start training.")

|

||||

state = tools.simulate(agent, train_envs, config.eval_every, state=state)

|

||||

torch.save(agent.state_dict(), logdir / "latest_model.pt")

|

||||

for env in train_envs + eval_envs:

|

||||

try:

|

||||

env.close()

|

||||

except Exception:

|

||||

pass

|

||||

|

||||

|

||||

if __name__ == "__main__":

|

||||

parser = argparse.ArgumentParser()

|

||||

parser.add_argument("--configs", nargs="+", required=True)

|

||||

args, remaining = parser.parse_known_args()

|

||||

configs = yaml.safe_load(

|

||||

(pathlib.Path(sys.argv[0]).parent / "configs.yaml").read_text()

|

||||

)

|

||||

defaults = {}

|

||||

for name in args.configs:

|

||||

defaults.update(configs[name])

|

||||

parser = argparse.ArgumentParser()

|

||||

for key, value in sorted(defaults.items(), key=lambda x: x[0]):

|

||||

arg_type = tools.args_type(value)

|

||||

parser.add_argument(f"--{key}", type=arg_type, default=arg_type(value))

|

||||

main(parser.parse_args(remaining))

|

||||

108

exploration.py

Normal file

108

exploration.py

Normal file

@ -0,0 +1,108 @@

|

||||

import torch

|

||||

from torch import nn

|

||||

from torch import distributions as torchd

|

||||

|

||||

import models

|

||||

import networks

|

||||

import tools

|

||||

|

||||

|

||||

class Random(nn.Module):

|

||||

def __init__(self, config):

|

||||

self._config = config

|

||||

|

||||

def actor(self, feat):

|

||||

shape = feat.shape[:-1] + [self._config.num_actions]

|

||||

if self._config.actor_dist == "onehot":

|

||||

return tools.OneHotDist(torch.zeros(shape))

|

||||

else:

|

||||

ones = torch.ones(shape)

|

||||

return tools.ContDist(torchd.uniform.Uniform(-ones, ones))

|

||||

|

||||

def train(self, start, context):

|

||||

return None, {}

|

||||

|

||||

|

||||

# class Plan2Explore(tools.Module):

|

||||

class Plan2Explore(nn.Module):

|

||||

def __init__(self, config, world_model, reward=None):

|

||||

self._config = config

|

||||

self._reward = reward

|

||||

self._behavior = models.ImagBehavior(config, world_model)

|

||||

self.actor = self._behavior.actor

|

||||

stoch_size = config.dyn_stoch

|

||||

if config.dyn_discrete:

|

||||

stoch_size *= config.dyn_discrete

|

||||

size = {

|

||||

"embed": 32 * config.cnn_depth,

|

||||

"stoch": stoch_size,

|

||||

"deter": config.dyn_deter,

|

||||

"feat": config.dyn_stoch + config.dyn_deter,

|

||||

}[self._config.disag_target]

|

||||

kw = dict(

|

||||

inp_dim=config.dyn_stoch, # pytorch version

|

||||

shape=size,

|

||||

layers=config.disag_layers,

|

||||

units=config.disag_units,

|

||||

act=config.act,

|

||||

)

|

||||

self._networks = [networks.DenseHead(**kw) for _ in range(config.disag_models)]

|

||||

self._opt = tools.optimizer(

|

||||

config.opt,

|

||||

self.parameters(),

|

||||

config.model_lr,

|

||||

config.opt_eps,

|

||||

config.weight_decay,

|

||||

)

|

||||

# self._opt = tools.Optimizer(

|

||||

# 'ensemble', config.model_lr, config.opt_eps, config.grad_clip,

|

||||

# config.weight_decay, opt=config.opt)

|

||||

|

||||

def train(self, start, context, data):

|

||||

metrics = {}

|

||||

stoch = start["stoch"]

|

||||

if self._config.dyn_discrete:

|

||||

stoch = tf.reshape(

|

||||

stoch, stoch.shape[:-2] + (stoch.shape[-2] * stoch.shape[-1])

|

||||

)

|

||||

target = {

|

||||

"embed": context["embed"],

|

||||

"stoch": stoch,

|

||||

"deter": start["deter"],

|

||||

"feat": context["feat"],

|

||||

}[self._config.disag_target]

|

||||

inputs = context["feat"]

|

||||

if self._config.disag_action_cond:

|

||||

inputs = tf.concat([inputs, data["action"]], -1)

|

||||

metrics.update(self._train_ensemble(inputs, target))

|

||||

metrics.update(self._behavior.train(start, self._intrinsic_reward)[-1])

|

||||

return None, metrics

|

||||

|

||||

def _intrinsic_reward(self, feat, state, action):

|

||||

inputs = feat

|

||||

if self._config.disag_action_cond:

|

||||

inputs = tf.concat([inputs, action], -1)

|

||||

preds = [head(inputs, tf.float32).mean() for head in self._networks]

|

||||

disag = tf.reduce_mean(tf.math.reduce_std(preds, 0), -1)

|

||||

if self._config.disag_log:

|

||||

disag = tf.math.log(disag)

|

||||

reward = self._config.expl_intr_scale * disag

|

||||

if self._config.expl_extr_scale:

|

||||

reward += tf.cast(

|

||||

self._config.expl_extr_scale * self._reward(feat, state, action),

|

||||

tf.float32,

|

||||

)

|

||||

return reward

|

||||

|

||||

def _train_ensemble(self, inputs, targets):

|

||||

if self._config.disag_offset:

|

||||

targets = targets[:, self._config.disag_offset :]

|

||||

inputs = inputs[:, : -self._config.disag_offset]

|

||||

targets = tf.stop_gradient(targets)

|

||||

inputs = tf.stop_gradient(inputs)

|

||||

with tf.GradientTape() as tape:

|

||||

preds = [head(inputs) for head in self._networks]

|

||||

likes = [tf.reduce_mean(pred.log_prob(targets)) for pred in preds]

|

||||

loss = -tf.cast(tf.reduce_sum(likes), tf.float32)

|

||||

metrics = self._opt(tape, loss, self._networks)

|

||||

return metrics

|

||||

509

models.py

Normal file

509

models.py

Normal file

@ -0,0 +1,509 @@

|

||||

import copy

|

||||

import torch

|

||||

from torch import nn

|

||||

import numpy as np

|

||||

from PIL import ImageColor, Image, ImageDraw, ImageFont

|

||||

|

||||

import networks

|

||||

import tools

|

||||

|

||||

to_np = lambda x: x.detach().cpu().numpy()

|

||||

|

||||

|

||||

def symlog(x):

|

||||

return torch.sign(x) * torch.log(torch.abs(x) + 1.0)

|

||||

|

||||

|

||||

def symexp(x):

|

||||

return torch.sign(x) * (torch.exp(torch.abs(x)) - 1.0)

|

||||

|

||||

|

||||

class RewardEMA(object):

|

||||

"""running mean and std"""

|

||||

|

||||

def __init__(self, device, alpha=1e-2):

|

||||

self.device = device

|

||||

self.scale = torch.zeros((1,)).to(device)

|

||||

self.alpha = alpha

|

||||

self.range = torch.tensor([0.05, 0.95]).to(device)

|

||||

|

||||

def __call__(self, x):

|

||||

flat_x = torch.flatten(x.detach())

|

||||

x_quantile = torch.quantile(input=flat_x, q=self.range)

|

||||

scale = x_quantile[1] - x_quantile[0]

|

||||

new_scale = self.alpha * scale + (1 - self.alpha) * self.scale

|

||||

self.scale = new_scale

|

||||

return x / torch.clip(self.scale, min=1.0)

|

||||

|

||||

|

||||

class WorldModel(nn.Module):

|

||||

def __init__(self, step, config):

|

||||

super(WorldModel, self).__init__()

|

||||

self._step = step

|

||||

self._use_amp = True if config.precision == 16 else False

|

||||

self._config = config

|

||||

self.encoder = networks.ConvEncoder(

|

||||

config.grayscale,

|

||||

config.cnn_depth,

|

||||

config.act,

|

||||

config.norm,

|

||||

config.encoder_kernels,

|

||||

)

|

||||

if config.size[0] == 64 and config.size[1] == 64:

|

||||

embed_size = (

|

||||

(64 // 2 ** (len(config.encoder_kernels))) ** 2

|

||||

* config.cnn_depth

|

||||

* 2 ** (len(config.encoder_kernels) - 1)

|

||||

)

|

||||

else:

|

||||

raise NotImplemented(f"{config.size} is not applicable now")

|

||||

self.dynamics = networks.RSSM(

|

||||

config.dyn_stoch,

|

||||

config.dyn_deter,

|

||||

config.dyn_hidden,

|

||||

config.dyn_input_layers,

|

||||

config.dyn_output_layers,

|

||||

config.dyn_rec_depth,

|

||||

config.dyn_shared,

|

||||

config.dyn_discrete,

|

||||

config.act,

|

||||

config.norm,

|

||||

config.dyn_mean_act,

|

||||

config.dyn_std_act,

|

||||

config.dyn_temp_post,

|

||||

config.dyn_min_std,

|

||||

config.dyn_cell,

|

||||

config.unimix_ratio,

|

||||

config.num_actions,

|

||||

embed_size,

|

||||

config.device,

|

||||

)

|

||||

self.heads = nn.ModuleDict()

|

||||

channels = 1 if config.grayscale else 3

|

||||

shape = (channels,) + config.size

|

||||

if config.dyn_discrete:

|

||||

feat_size = config.dyn_stoch * config.dyn_discrete + config.dyn_deter

|

||||

else:

|

||||

feat_size = config.dyn_stoch + config.dyn_deter

|

||||

self.heads["image"] = networks.ConvDecoder(

|

||||

feat_size, # pytorch version

|

||||

config.cnn_depth,

|

||||

config.act,

|

||||

config.norm,

|

||||

shape,

|

||||

config.decoder_kernels,

|

||||

)

|

||||

if config.reward_head == "twohot":

|

||||

self.heads["reward"] = networks.DenseHead(

|

||||

feat_size, # pytorch version

|

||||

(255,),

|

||||

config.reward_layers,

|

||||

config.units,

|

||||

config.act,

|

||||

config.norm,

|

||||

dist=config.reward_head,

|

||||

)

|

||||

else:

|

||||

self.heads["reward"] = networks.DenseHead(

|

||||

feat_size, # pytorch version

|

||||

[],

|

||||

config.reward_layers,

|

||||

config.units,

|

||||

config.act,

|

||||

config.norm,

|

||||

dist=config.reward_head,

|

||||

)

|

||||

# added this

|

||||

self.heads["reward"].apply(tools.weight_init)

|

||||

if config.pred_discount:

|

||||

self.heads["discount"] = networks.DenseHead(

|

||||

feat_size, # pytorch version

|

||||

[],

|

||||

config.discount_layers,

|

||||

config.units,

|

||||

config.act,

|

||||

config.norm,

|

||||

dist="binary",

|

||||

)

|

||||

for name in config.grad_heads:

|

||||

assert name in self.heads, name

|

||||

self._model_opt = tools.Optimizer(

|

||||

"model",

|

||||

self.parameters(),

|

||||

config.model_lr,

|

||||

config.opt_eps,

|

||||

config.grad_clip,

|

||||

config.weight_decay,

|

||||

opt=config.opt,

|

||||

use_amp=self._use_amp,

|

||||

)

|

||||

self._scales = dict(reward=config.reward_scale, discount=config.discount_scale)

|

||||

|

||||

def _train(self, data):

|

||||

# action (batch_size, batch_length, act_dim)

|

||||

# image (batch_size, batch_length, h, w, ch)

|

||||

# reward (batch_size, batch_length)

|

||||

# discount (batch_size, batch_length)

|

||||

data = self.preprocess(data)

|

||||

|

||||

with tools.RequiresGrad(self):

|

||||

with torch.cuda.amp.autocast(self._use_amp):

|

||||

embed = self.encoder(data)

|

||||

post, prior = self.dynamics.observe(embed, data["action"])

|

||||

kl_free = tools.schedule(self._config.kl_free, self._step)

|

||||

kl_lscale = tools.schedule(self._config.kl_lscale, self._step)

|

||||

kl_rscale = tools.schedule(self._config.kl_rscale, self._step)

|

||||

kl_loss, kl_value, loss_lhs, loss_rhs = self.dynamics.kl_loss(

|

||||

post, prior, self._config.kl_forward, kl_free, kl_lscale, kl_rscale

|

||||

)

|

||||

losses = {}

|

||||

likes = {}

|

||||

for name, head in self.heads.items():

|

||||

grad_head = name in self._config.grad_heads

|

||||

feat = self.dynamics.get_feat(post)

|

||||

feat = feat if grad_head else feat.detach()

|

||||

pred = head(feat)

|

||||

# if name == 'image':

|

||||

# losses[name] = torch.nn.functional.mse_loss(pred.mode(), data[name], 'sum')

|

||||

like = pred.log_prob(data[name])

|

||||

likes[name] = like

|

||||

losses[name] = -torch.mean(like) * self._scales.get(name, 1.0)

|

||||

model_loss = sum(losses.values()) + kl_loss

|

||||

metrics = self._model_opt(model_loss, self.parameters())

|

||||

|

||||

metrics.update({f"{name}_loss": to_np(loss) for name, loss in losses.items()})

|

||||

metrics["kl_free"] = kl_free

|

||||

metrics["kl_lscale"] = kl_lscale

|

||||

metrics["kl_rscale"] = kl_rscale

|

||||

metrics["loss_lhs"] = to_np(loss_lhs)

|

||||

metrics["loss_rhs"] = to_np(loss_rhs)

|

||||

metrics["kl"] = to_np(torch.mean(kl_value))

|

||||

with torch.cuda.amp.autocast(self._use_amp):

|

||||

metrics["prior_ent"] = to_np(

|

||||

torch.mean(self.dynamics.get_dist(prior).entropy())

|

||||

)

|

||||

metrics["post_ent"] = to_np(

|

||||

torch.mean(self.dynamics.get_dist(post).entropy())

|

||||

)

|

||||

context = dict(

|

||||

embed=embed,

|

||||

feat=self.dynamics.get_feat(post),

|

||||

kl=kl_value,

|

||||

postent=self.dynamics.get_dist(post).entropy(),

|

||||

)

|

||||

post = {k: v.detach() for k, v in post.items()}

|

||||

return post, context, metrics

|

||||

|

||||

def preprocess(self, obs):

|

||||

obs = obs.copy()

|

||||

if self._config.obs_trans == "normalize":

|

||||

obs["image"] = torch.Tensor(obs["image"]) / 255.0 - 0.5

|

||||

elif self._config.obs_trans == "identity":

|

||||

obs["image"] = torch.Tensor(obs["image"])

|

||||

elif self._config.obs_trans == "symlog":

|

||||

obs["image"] = symlog(torch.Tensor(obs["image"]))

|

||||

else:

|

||||

raise NotImplemented(f"{self._config.reward_trans} is not implemented")

|

||||

if self._config.reward_trans == "tanh":

|

||||

# (batch_size, batch_length) -> (batch_size, batch_length, 1)

|

||||

obs["reward"] = torch.tanh(torch.Tensor(obs["reward"])).unsqueeze(-1)

|

||||

elif self._config.reward_trans == "identity":

|

||||

# (batch_size, batch_length) -> (batch_size, batch_length, 1)

|

||||

obs["reward"] = torch.Tensor(obs["reward"]).unsqueeze(-1)

|

||||

elif self._config.reward_trans == "symlog":

|

||||

obs["reward"] = symlog(torch.Tensor(obs["reward"])).unsqueeze(-1)

|

||||

else:

|

||||

raise NotImplemented(f"{self._config.reward_trans} is not implemented")

|

||||

if "discount" in obs:

|

||||

obs["discount"] *= self._config.discount

|

||||

# (batch_size, batch_length) -> (batch_size, batch_length, 1)

|

||||

obs["discount"] = torch.Tensor(obs["discount"]).unsqueeze(-1)

|

||||

obs = {k: torch.Tensor(v).to(self._config.device) for k, v in obs.items()}

|

||||

return obs

|

||||

|

||||

def video_pred(self, data):

|

||||

data = self.preprocess(data)

|

||||

embed = self.encoder(data)

|

||||

|

||||

states, _ = self.dynamics.observe(embed[:6, :5], data["action"][:6, :5])

|

||||

recon = self.heads["image"](self.dynamics.get_feat(states)).mode()[:6]

|

||||

reward_post = self.heads["reward"](self.dynamics.get_feat(states)).mode()[:6]

|

||||

init = {k: v[:, -1] for k, v in states.items()}

|

||||

prior = self.dynamics.imagine(data["action"][:6, 5:], init)

|

||||

openl = self.heads["image"](self.dynamics.get_feat(prior)).mode()

|

||||

reward_prior = self.heads["reward"](self.dynamics.get_feat(prior)).mode()

|

||||

# observed image is given until 5 steps

|

||||

model = torch.cat([recon[:, :5], openl], 1)

|

||||

if self._config.obs_trans == "normalize":

|

||||

truth = data["image"][:6] + 0.5

|

||||

model += 0.5

|

||||

elif self._config.obs_trans == "symlog":

|

||||

truth = symexp(data["image"][:6]) / 255.0

|

||||

model = symexp(model) / 255.0

|

||||

error = (model - truth + 1) / 2

|

||||

|

||||

return torch.cat([truth, model, error], 2)

|

||||

|

||||

|

||||

class ImagBehavior(nn.Module):

|

||||

def __init__(self, config, world_model, stop_grad_actor=True, reward=None):

|

||||

super(ImagBehavior, self).__init__()

|

||||

self._use_amp = True if config.precision == 16 else False

|

||||

self._config = config

|

||||

self._world_model = world_model

|

||||

self._stop_grad_actor = stop_grad_actor

|

||||

self._reward = reward

|

||||

if config.dyn_discrete:

|

||||

feat_size = config.dyn_stoch * config.dyn_discrete + config.dyn_deter

|

||||

else:

|

||||

feat_size = config.dyn_stoch + config.dyn_deter

|

||||

self.actor = networks.ActionHead(

|

||||

feat_size, # pytorch version

|

||||

config.num_actions,

|

||||

config.actor_layers,

|

||||

config.units,

|

||||

config.act,

|

||||

config.norm,

|

||||

config.actor_dist,

|

||||

config.actor_init_std,

|

||||

config.actor_min_std,

|

||||

config.actor_dist,

|

||||

config.actor_temp,

|

||||

config.actor_outscale,

|

||||

) # action_dist -> action_disc?

|

||||

if config.value_head == "twohot":

|

||||

self.value = networks.DenseHead(

|

||||

feat_size, # pytorch version

|

||||

(255,),

|

||||

config.value_layers,

|

||||

config.units,

|

||||

config.act,

|

||||

config.norm,

|

||||

config.value_head,

|

||||

)

|

||||

else:

|

||||

self.value = networks.DenseHead(

|

||||

feat_size, # pytorch version

|

||||

[],

|

||||

config.value_layers,

|

||||

config.units,

|

||||

config.act,

|

||||

config.norm,

|

||||

config.value_head,

|

||||

)

|

||||

self.value.apply(tools.weight_init)

|

||||

if config.slow_value_target or config.slow_actor_target:

|

||||

self._slow_value = copy.deepcopy(self.value)

|

||||

self._updates = 0

|

||||

kw = dict(wd=config.weight_decay, opt=config.opt, use_amp=self._use_amp)

|

||||

self._actor_opt = tools.Optimizer(

|

||||

"actor",

|

||||

self.actor.parameters(),

|

||||

config.actor_lr,

|

||||

config.ac_opt_eps,

|

||||

config.actor_grad_clip,

|

||||

**kw,

|

||||

)

|

||||

self._value_opt = tools.Optimizer(

|

||||

"value",

|

||||

self.value.parameters(),

|

||||

config.value_lr,

|

||||

config.ac_opt_eps,

|

||||

config.value_grad_clip,

|

||||

**kw,

|

||||

)

|

||||

if self._config.reward_EMA:

|

||||

self.reward_ema = RewardEMA(device=self._config.device)

|

||||

|

||||

def _train(

|

||||

self,

|

||||

start,

|

||||

objective=None,

|

||||

action=None,

|

||||

reward=None,

|

||||

imagine=None,

|

||||

tape=None,

|

||||

repeats=None,

|

||||

):

|

||||

objective = objective or self._reward

|

||||

self._update_slow_target()

|

||||

metrics = {}

|

||||

|

||||

with tools.RequiresGrad(self.actor):

|

||||

with torch.cuda.amp.autocast(self._use_amp):

|

||||

imag_feat, imag_state, imag_action = self._imagine(

|

||||

start, self.actor, self._config.imag_horizon, repeats

|

||||

)

|

||||

reward = objective(imag_feat, imag_state, imag_action)

|

||||

if self._config.reward_trans == "symlog":

|

||||

# rescale predicted reward by head['reward']

|

||||

reward = symexp(reward)

|

||||

actor_ent = self.actor(imag_feat).entropy()

|

||||

state_ent = self._world_model.dynamics.get_dist(imag_state).entropy()

|

||||

# this target is not scaled

|

||||

# slow is flag to indicate whether slow_target is used for lambda-return

|

||||

target, weights = self._compute_target(

|

||||

imag_feat,

|

||||

imag_state,

|

||||

imag_action,

|

||||

reward,

|

||||

actor_ent,

|

||||

state_ent,

|

||||

self._config.slow_actor_target,

|

||||

)

|

||||

actor_loss, mets = self._compute_actor_loss(

|

||||

imag_feat,

|

||||

imag_state,

|

||||

imag_action,

|

||||

target,

|

||||

actor_ent,

|

||||

state_ent,

|

||||

weights,

|

||||

)

|

||||

metrics.update(mets)

|

||||

if self._config.slow_value_target != self._config.slow_actor_target:

|

||||

target, weights = self._compute_target(

|

||||

imag_feat,

|

||||

imag_state,

|

||||

imag_action,

|

||||

reward,

|

||||

actor_ent,

|

||||

state_ent,

|

||||

self._config.slow_value_target,

|

||||

)

|

||||

value_input = imag_feat

|

||||

|

||||

with tools.RequiresGrad(self.value):

|

||||

with torch.cuda.amp.autocast(self._use_amp):

|

||||

value = self.value(value_input[:-1].detach())

|

||||

target = torch.stack(target, dim=1)

|

||||

# only critic target is processed using symlog(not actor)

|

||||

if self._config.critic_trans == "symlog":

|

||||

metrics["unscaled_target_mean"] = to_np(torch.mean(target))

|

||||

target = symlog(target)

|

||||

# (time, batch, 1), (time, batch, 1) -> (time, batch)

|

||||

value_loss = -value.log_prob(target.detach())

|

||||

if self._config.value_decay:

|

||||

value_loss += self._config.value_decay * value.mode()

|

||||

# (time, batch, 1), (time, batch, 1) -> (1,)

|

||||

value_loss = torch.mean(weights[:-1] * value_loss[:, :, None])

|

||||

|

||||

metrics["value_mean"] = to_np(torch.mean(value.mode()))

|

||||

metrics["value_max"] = to_np(torch.max(value.mode()))

|

||||

metrics["value_min"] = to_np(torch.min(value.mode()))

|

||||

metrics["value_std"] = to_np(torch.std(value.mode()))

|

||||

metrics["target_mean"] = to_np(torch.mean(target))

|

||||

metrics["reward_mean"] = to_np(torch.mean(reward))

|

||||

metrics["reward_std"] = to_np(torch.std(reward))

|

||||

metrics["actor_ent"] = to_np(torch.mean(actor_ent))

|

||||

with tools.RequiresGrad(self):

|

||||

metrics.update(self._actor_opt(actor_loss, self.actor.parameters()))

|

||||

metrics.update(self._value_opt(value_loss, self.value.parameters()))

|

||||

return imag_feat, imag_state, imag_action, weights, metrics

|

||||

|

||||

def _imagine(self, start, policy, horizon, repeats=None):

|

||||

dynamics = self._world_model.dynamics

|

||||

if repeats:

|

||||

raise NotImplemented("repeats is not implemented in this version")

|

||||

flatten = lambda x: x.reshape([-1] + list(x.shape[2:]))

|

||||

start = {k: flatten(v) for k, v in start.items()}

|

||||

|

||||

def step(prev, _):

|

||||

state, _, _ = prev

|

||||

feat = dynamics.get_feat(state)

|

||||

inp = feat.detach() if self._stop_grad_actor else feat

|

||||

action = policy(inp).sample()

|

||||

succ = dynamics.img_step(state, action, sample=self._config.imag_sample)

|

||||

return succ, feat, action

|

||||

|

||||

feat = 0 * dynamics.get_feat(start)

|

||||

action = policy(feat).mode()

|

||||

succ, feats, actions = tools.static_scan(

|

||||

step, [torch.arange(horizon)], (start, feat, action)

|

||||

)

|

||||

states = {k: torch.cat([start[k][None], v[:-1]], 0) for k, v in succ.items()}

|

||||

if repeats:

|

||||

raise NotImplemented("repeats is not implemented in this version")

|

||||

|

||||

return feats, states, actions

|

||||

|

||||

def _compute_target(

|

||||

self, imag_feat, imag_state, imag_action, reward, actor_ent, state_ent, slow

|

||||

):

|

||||

if "discount" in self._world_model.heads:

|

||||

inp = self._world_model.dynamics.get_feat(imag_state)

|

||||

discount = self._world_model.heads["discount"](inp).mean

|

||||

else:

|

||||

discount = self._config.discount * torch.ones_like(reward)

|

||||

if self._config.future_entropy and self._config.actor_entropy() > 0:

|

||||

reward += self._config.actor_entropy() * actor_ent

|

||||

if self._config.future_entropy and self._config.actor_state_entropy() > 0:

|

||||

reward += self._config.actor_state_entropy() * state_ent

|

||||

if slow:

|

||||

value = self._slow_value(imag_feat).mode()

|

||||

else:

|

||||

value = self.value(imag_feat).mode()

|

||||

if self._config.critic_trans == "symlog":

|

||||

# After adding this line there is issue

|

||||

value = symexp(value)

|

||||

target = tools.lambda_return(

|

||||

reward[:-1],

|

||||

value[:-1],

|

||||

discount[:-1],

|

||||

bootstrap=value[-1],

|

||||

lambda_=self._config.discount_lambda,

|

||||

axis=0,

|

||||

)

|

||||

weights = torch.cumprod(

|

||||

torch.cat([torch.ones_like(discount[:1]), discount[:-1]], 0), 0

|

||||

).detach()

|

||||

return target, weights

|

||||

|

||||

def _compute_actor_loss(

|

||||

self, imag_feat, imag_state, imag_action, target, actor_ent, state_ent, weights

|

||||

):

|

||||

metrics = {}

|

||||

inp = imag_feat.detach() if self._stop_grad_actor else imag_feat

|

||||

policy = self.actor(inp)

|

||||

actor_ent = policy.entropy()

|

||||

# Q-val for actor is not transformed using symlog

|

||||

target = torch.stack(target, dim=1)

|

||||

if self._config.reward_EMA:

|

||||

target = self.reward_ema(target)

|

||||

metrics["EMA_scale"] = to_np(self.reward_ema.scale)

|

||||

|

||||

if self._config.imag_gradient == "dynamics":

|

||||

actor_target = target

|

||||

elif self._config.imag_gradient == "reinforce":

|

||||

actor_target = (

|

||||

policy.log_prob(imag_action)[:-1][:, :, None]

|

||||

* (target - self.value(imag_feat[:-1]).mode()).detach()

|

||||

)

|

||||

elif self._config.imag_gradient == "both":

|

||||

actor_target = (

|

||||

policy.log_prob(imag_action)[:-1][:, :, None]

|

||||

* (target - self.value(imag_feat[:-1]).mode()).detach()

|

||||

)

|

||||

mix = self._config.imag_gradient_mix()

|

||||

actor_target = mix * target + (1 - mix) * actor_target

|

||||

metrics["imag_gradient_mix"] = mix

|

||||

else:

|

||||

raise NotImplementedError(self._config.imag_gradient)

|

||||

if not self._config.future_entropy and (self._config.actor_entropy() > 0):

|

||||

actor_entropy = self._config.actor_entropy() * actor_ent[:-1][:, :, None]

|

||||

actor_target += actor_entropy

|

||||

metrics["actor_entropy"] = to_np(torch.mean(actor_entropy))

|

||||

if not self._config.future_entropy and (self._config.actor_state_entropy() > 0):

|

||||

state_entropy = self._config.actor_state_entropy() * state_ent[:-1]

|

||||

actor_target += state_entropy

|

||||

metrics["actor_state_entropy"] = to_np(torch.mean(state_entropy))

|

||||

actor_loss = -torch.mean(weights[:-1] * actor_target)

|

||||

return actor_loss, metrics

|

||||

|

||||

def _update_slow_target(self):

|

||||

if self._config.slow_value_target or self._config.slow_actor_target:

|

||||

if self._updates % self._config.slow_target_update == 0:

|

||||

mix = self._config.slow_target_fraction

|

||||

for s, d in zip(self.value.parameters(), self._slow_value.parameters()):

|

||||

d.data = mix * s.data + (1 - mix) * d.data

|

||||

self._updates += 1

|

||||

631

networks.py

Normal file

631

networks.py

Normal file

@ -0,0 +1,631 @@

|

||||

import math

|

||||

import numpy as np

|

||||

|

||||

import torch

|

||||

from torch import nn

|

||||

import torch.nn.functional as F

|

||||

from torch import distributions as torchd

|

||||

|

||||

import tools

|

||||

|

||||

|

||||

class RSSM(nn.Module):

|

||||

def __init__(

|

||||

self,

|

||||

stoch=30,

|

||||

deter=200,

|

||||

hidden=200,

|

||||

layers_input=1,

|

||||

layers_output=1,

|

||||

rec_depth=1,

|

||||

shared=False,

|

||||

discrete=False,

|

||||

act=nn.ELU,

|

||||

norm=nn.LayerNorm,

|

||||

mean_act="none",

|

||||

std_act="softplus",

|

||||

temp_post=True,

|

||||

min_std=0.1,

|

||||

cell="gru",

|

||||

unimix_ratio=0.01,

|

||||

num_actions=None,

|

||||

embed=None,

|

||||

device=None,

|

||||

):

|

||||

super(RSSM, self).__init__()

|

||||

self._stoch = stoch

|

||||

self._deter = deter

|

||||

self._hidden = hidden

|

||||

self._min_std = min_std

|

||||

self._layers_input = layers_input

|

||||

self._layers_output = layers_output

|

||||

self._rec_depth = rec_depth

|

||||

self._shared = shared

|

||||

self._discrete = discrete

|

||||

self._act = act

|

||||

self._norm = norm

|

||||

self._mean_act = mean_act

|

||||

self._std_act = std_act

|

||||

self._temp_post = temp_post

|

||||

self._unimix_ratio = unimix_ratio

|

||||

self._embed = embed

|

||||

self._device = device

|

||||

|

||||

inp_layers = []

|

||||

if self._discrete:

|

||||

inp_dim = self._stoch * self._discrete + num_actions

|

||||

else:

|

||||

inp_dim = self._stoch + num_actions

|

||||

if self._shared:

|

||||

inp_dim += self._embed

|

||||

for i in range(self._layers_input):

|

||||

inp_layers.append(nn.Linear(inp_dim, self._hidden))

|

||||

inp_layers.append(self._act())

|

||||

if i == 0:

|

||||

inp_dim = self._hidden

|

||||

self._inp_layers = nn.Sequential(*inp_layers)

|

||||

|

||||

if cell == "gru":

|

||||

self._cell = GRUCell(self._hidden, self._deter)

|

||||

elif cell == "gru_layer_norm":

|

||||

self._cell = GRUCell(self._hidden, self._deter, norm=True)

|

||||

else:

|

||||

raise NotImplementedError(cell)

|

||||

|

||||

img_out_layers = []

|

||||

inp_dim = self._deter

|

||||

for i in range(self._layers_output):

|

||||

img_out_layers.append(nn.Linear(inp_dim, self._hidden))

|

||||

img_out_layers.append(self._norm(self._hidden))

|

||||

img_out_layers.append(self._act())

|

||||

if i == 0:

|

||||

inp_dim = self._hidden

|

||||

self._img_out_layers = nn.Sequential(*img_out_layers)

|

||||

|

||||

obs_out_layers = []

|

||||

if self._temp_post:

|

||||

inp_dim = self._deter + self._embed

|

||||

else:

|

||||

inp_dim = self._embed

|

||||

for i in range(self._layers_output):

|

||||

obs_out_layers.append(nn.Linear(inp_dim, self._hidden))

|

||||

obs_out_layers.append(self._norm(self._hidden))

|

||||

obs_out_layers.append(self._act())

|

||||

if i == 0:

|

||||

inp_dim = self._hidden

|

||||

self._obs_out_layers = nn.Sequential(*obs_out_layers)

|

||||

|

||||

if self._discrete:

|

||||

self._ims_stat_layer = nn.Linear(self._hidden, self._stoch * self._discrete)

|

||||

self._obs_stat_layer = nn.Linear(self._hidden, self._stoch * self._discrete)

|

||||

else:

|

||||

self._ims_stat_layer = nn.Linear(self._hidden, 2 * self._stoch)

|

||||

self._obs_stat_layer = nn.Linear(self._hidden, 2 * self._stoch)

|

||||

|

||||

def initial(self, batch_size):

|

||||

deter = torch.zeros(batch_size, self._deter).to(self._device)

|

||||

if self._discrete:

|

||||

state = dict(

|

||||

logit=torch.zeros([batch_size, self._stoch, self._discrete]).to(

|

||||

self._device

|

||||

),

|

||||

stoch=torch.zeros([batch_size, self._stoch, self._discrete]).to(

|

||||

self._device

|

||||

),

|

||||

deter=deter,

|

||||

)

|

||||

else:

|

||||

state = dict(

|

||||

mean=torch.zeros([batch_size, self._stoch]).to(self._device),

|

||||

std=torch.zeros([batch_size, self._stoch]).to(self._device),

|

||||

stoch=torch.zeros([batch_size, self._stoch]).to(self._device),

|

||||

deter=deter,

|

||||

)

|

||||

return state

|

||||

|

||||

def observe(self, embed, action, state=None):

|

||||

swap = lambda x: x.permute([1, 0] + list(range(2, len(x.shape))))

|

||||

if state is None:

|

||||

state = self.initial(action.shape[0])

|

||||

# (batch, time, ch) -> (time, batch, ch)

|

||||

embed, action = swap(embed), swap(action)

|

||||

post, prior = tools.static_scan(

|

||||

lambda prev_state, prev_act, embed: self.obs_step(

|

||||

prev_state[0], prev_act, embed

|

||||

),

|

||||

(action, embed),

|

||||

(state, state),

|

||||

)

|

||||

|

||||

# (batch, time, stoch, discrete_num) -> (batch, time, stoch, discrete_num)

|

||||

post = {k: swap(v) for k, v in post.items()}

|

||||

prior = {k: swap(v) for k, v in prior.items()}

|

||||

return post, prior

|

||||

|

||||

def imagine(self, action, state=None):

|

||||

swap = lambda x: x.permute([1, 0] + list(range(2, len(x.shape))))

|

||||

if state is None:

|

||||

state = self.initial(action.shape[0])

|

||||

assert isinstance(state, dict), state

|

||||

action = action

|

||||

action = swap(action)

|

||||

prior = tools.static_scan(self.img_step, [action], state)

|

||||

prior = prior[0]

|

||||

prior = {k: swap(v) for k, v in prior.items()}

|

||||

return prior

|

||||

|

||||

def get_feat(self, state):

|

||||

stoch = state["stoch"]

|

||||

if self._discrete:

|

||||

shape = list(stoch.shape[:-2]) + [self._stoch * self._discrete]

|

||||

stoch = stoch.reshape(shape)

|

||||

return torch.cat([stoch, state["deter"]], -1)

|

||||

|

||||

def get_dist(self, state, dtype=None):

|

||||

if self._discrete:

|

||||

logit = state["logit"]

|

||||

dist = torchd.independent.Independent(

|

||||

tools.OneHotDist(logit, unimix_ratio=self._unimix_ratio), 1

|

||||

)

|

||||

else:

|

||||

mean, std = state["mean"], state["std"]

|

||||

dist = tools.ContDist(

|

||||

torchd.independent.Independent(torchd.normal.Normal(mean, std), 1)

|

||||

)

|

||||

return dist

|

||||

|

||||

def obs_step(self, prev_state, prev_action, embed, sample=True):

|

||||