# Changes

## Dependencies

- New extra `eval`

## API Extension

- `Experiment` and `ExperimentConfig` now have a `name`, which can be overridden when `Experiment.run()` is called

- When building an `Experiment` from an `ExperimentConfig`, the user can optionally add information about the seeds to the name.

- New method in `ExperimentConfig` called `build_default_seeded_experiments`

- `SamplingConfig` has an explicit training seed; `test_seed` is inferred.

- New `evaluation` package for repeating the same experiment with multiple seeds and aggregating the results (important extension!). Currently in alpha state.

- Loggers can now restore the logged data into Python by using the new `restore_logged_data` method
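The idea behind the new `evaluation` package (run one experiment under several seeds, then aggregate) can be sketched with the standard library alone. `run_experiment` and `aggregate_over_seeds` are hypothetical stand-ins, not the package's API; a real run would train a policy and report something like its final test return.

```python
import random
import statistics
from collections.abc import Callable, Sequence


def run_experiment(seed: int) -> float:
    """Hypothetical stand-in for one training run; returns a final test return."""
    rng = random.Random(seed)  # each seed drives its own independent RNG
    return 100.0 + rng.gauss(0.0, 5.0)


def aggregate_over_seeds(
    experiment: Callable[[int], float], seeds: Sequence[int]
) -> dict[str, float]:
    """Run the same experiment once per seed and summarize the results."""
    results = [experiment(seed) for seed in seeds]
    return {
        "mean": statistics.mean(results),
        # the sample standard deviation needs at least two runs
        "std": statistics.stdev(results) if len(results) > 1 else 0.0,
        "min": min(results),
        "max": max(results),
    }


summary = aggregate_over_seeds(run_experiment, seeds=[0, 1, 2, 3, 4])
print(summary)
```

Because every run is fully determined by its seed, repeating the aggregation with the same seed list reproduces the same summary.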

## Breaking Changes

- `AtariEnvFactory` (in examples) now receives explicit train and test seeds

- `EnvFactoryRegistered` now requires an explicit `test_seed`

- `BaseLogger.prepare_dict_for_logging` is now abstract
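Making `prepare_dict_for_logging` abstract means every concrete logger must implement it, or it can no longer be instantiated. The sketch below shows that mechanism with `abc`. `MiniBaseLogger`, `FlatDictLogger`, the `write` method and the flattening behavior are hypothetical, and the real `BaseLogger` signature may differ.

```python
from abc import ABC, abstractmethod
from typing import Any


class MiniBaseLogger(ABC):
    """Hypothetical, simplified stand-in for a logger base class."""

    @abstractmethod
    def prepare_dict_for_logging(self, data: dict[str, Any]) -> dict[str, Any]:
        """Subclasses decide how raw data is turned into loggable values."""

    def write(self, step: int, data: dict[str, Any]) -> dict[str, Any]:
        prepared = self.prepare_dict_for_logging(data)
        # a real logger would send `prepared` to a backend here
        return {"step": step, **prepared}


class FlatDictLogger(MiniBaseLogger):
    """Example subclass that flattens nested dicts into '/'-separated keys."""

    def prepare_dict_for_logging(self, data: dict[str, Any]) -> dict[str, Any]:
        flat: dict[str, Any] = {}

        def _flatten(prefix: str, value: Any) -> None:
            if isinstance(value, dict):
                for key, sub_value in value.items():
                    _flatten(f"{prefix}/{key}" if prefix else key, sub_value)
            else:
                flat[prefix] = value

        _flatten("", data)
        return flat


logger = FlatDictLogger()
print(logger.write(1, {"train": {"loss": 0.5, "lr": 1e-3}}))
# {'step': 1, 'train/loss': 0.5, 'train/lr': 0.001}
```

Calling `MiniBaseLogger()` directly raises a `TypeError`, which is exactly the breaking part of this change for custom loggers that relied on an inherited default.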

---------

Co-authored-by: Maximilian Huettenrauch <m.huettenrauch@appliedai.de>

Co-authored-by: Michael Panchenko <m.panchenko@appliedai.de>

Co-authored-by: Michael Panchenko <35432522+MischaPanch@users.noreply.github.com>

This PR adds strict typing to the output of `update` and `learn` in all policies. This will likely be the last large refactoring PR before the next release (0.6.0, not 1.0.0), so it requires some attention. Several difficulties were encountered on the path to that goal:

1. The policy hierarchy is actually "broken" in the sense that the keys of the dicts output by `learn` did not follow the same enhancement (inheritance) pattern as the policies. This is a real problem and should be addressed in the near future. More generally, several aspects of the policy design and hierarchy might deserve a dedicated discussion.

2. Each policy needs to be generic in the stats return type, because one might want to extend it at some point and then also extend the stats. Even within the code base this pattern is necessary in many places.

3. The interaction between `learn` and `update` is a bit quirky: we currently handle it by having `update` modify a special field inside `TrainingStats`, whereas all other fields are handled by `learn`.

4. The ICM module is a policy wrapper and required a `TrainingStatsWrapper`. The latter relies on a bunch of black magic.

They were addressed as follows:

1. Live with the broken hierarchy, which is now made visible by bounds in generics. We use `type: ignore` where appropriate.

2. Make all policies generic with bounds following the policy inheritance hierarchy (which is incorrect, see above). We experimented a bit with nested `TrainingStats` classes, but that seemed to add more complexity and be harder to understand. Unfortunately, mypy thinks that the code below is wrong, which is why we have to add `type: ignore` to the return of each `learn`:

```python
from typing import TypeVar

T = TypeVar("T", bound=int)


def f() -> T:
    # mypy flags this return statement as incompatible with T
    return 3
```

3. See above

4. Write representative tests for the `TrainingStatsWrapper`. Still, the black magic might cause nasty surprises down the line (I am not proud of it)...

Closes #933

---------

Co-authored-by: Maximilian Huettenrauch <m.huettenrauch@appliedai.de>

Co-authored-by: Michael Panchenko <m.panchenko@appliedai.de>

Closes #947

This removes all kwargs from all policy constructors. While doing that, I also improved several names and added a whole lot of TODOs.

## Functional changes:

1. Added the possibility to pass `None` as `critic2` and `critic2_optim`. In fact, the default behavior should then cover the vast majority of cases

2. Added a function called `clone_optimizer` as a temporary measure to support passing `critic2_optim=None`

## Breaking changes:

1. `action_space` is no longer optional. In fact, it already was non-optional, as there was a `ValueError` in `BasePolicy.__init__`. Several examples were fixed to reflect that

2. `reward_normalization` was removed from DDPG and its children. It was never allowed to pass it as `True` there, since an error would have been raised in `compute_n_step_reward`. Now I removed it from the interface

3. Renamed `critic1` and similar to `critic`, in order to have uniform interfaces. Note that the `critic` in DDPG was optional for the sole reason that child classes used `critic1`. I removed this optionality (DDPG can't do anything with `critic=None`)

4. Several renamings of fields (mostly private to public, so backwards compatible)

## Additional changes:

1. Removed type and default declarations from docstrings. This kind of duplication is really not necessary

2. Policy constructors are now only called using named arguments, not a fragile mixture of positional and named arguments as before

3. Minor beautifications in typing and code

4. Generally shortened docstrings and made them uniform across all policies (hopefully)
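In Python, named-only construction is usually enforced with a bare `*` in the signature, which makes every following parameter keyword-only. `ToyDDPGPolicy` is a hypothetical illustration of that pattern (including a required `action_space` with no default), not tianshou's actual signature.

```python
from typing import Any


class ToyDDPGPolicy:
    def __init__(
        self,
        *,  # everything after this must be passed by name
        actor: Any,
        critic: Any,
        action_space: Any,  # required: no default value
        tau: float = 0.005,
        gamma: float = 0.99,
    ) -> None:
        self.actor = actor
        self.critic = critic
        self.action_space = action_space
        self.tau = tau
        self.gamma = gamma


policy = ToyDDPGPolicy(actor="actor", critic="critic", action_space="box")

positional_rejected = False
try:
    ToyDDPGPolicy("actor", "critic", "box")  # a positional call raises TypeError
except TypeError:
    positional_rejected = True
print("positional call rejected:", positional_rejected)
```

With keyword-only parameters, renaming or reordering arguments can no longer silently bind a value to the wrong parameter.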

## Comment:

With these changes, several problems in tianshou's inheritance hierarchy become more apparent. I tried highlighting them for future work.

---------

Co-authored-by: Dominik Jain <d.jain@appliedai.de>

Closes #914

Additional changes:

- Deprecate Python versions below 3.11

- Remove 3rd-party and throughput tests. This simplifies the install and test pipeline

- Remove gym compatibility and shimmy

- Format with Python 3.11 conventions. In particular, add `zip(..., strict=True/False)` where possible

Since the additional tests and gym were complicating the CI pipeline (flaky and dist-dependent), it didn't make sense to work on fixing the current tests in this PR only to delete them in the next one. So this PR changes the build and removes these tests at the same time.

Preparation for #914 and #920

Changes formatting to ruff and black and removes Python 3.8.

## Additional Changes

- Removed flake8 dependencies

- Adjusted pre-commit. Now CI and Make use pre-commit, reducing the duplication of linting calls

- Removed check-docstyle option (ruff is doing that)

- Merged format and lint. In CI, the format-lint step fails if any changes are made, so it fulfills the lint functionality.

---------

Co-authored-by: Jiayi Weng <jiayi@openai.com>

## Implementation

I implemented HER solely as a replay buffer. It works by temporarily rewriting the transition storage (`self._meta`) directly during the `sample_indices()` call. The original transitions are cached and restored at the beginning of the next sampling or when other methods are called. This ensures that, for example, n-step return calculation can be done without altering the policy.

There is also a problem with the original index sampling: the sampled indices are not guaranteed to come from different episodes. So I decided to perform the rewriting per episode, which guarantees that sampled transitions from the same episode get the same rewritten goal. This also makes the rewriting ratio slightly different from the paper, but the difference is small if there are many episodes in the buffer.

In the current commit, the HER replay buffer only supports the 'future' strategy and online sampling. This is the best variant of HER in terms of performance and memory efficiency.
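The per-episode 'future' relabeling described above can be sketched as follows: sampled indices are grouped by episode, each episode is relabeled with some probability, and a relabeled episode draws one future step at or after its latest sampled step, whose achieved goal becomes the new desired goal of all its sampled transitions. This is a simplified, hypothetical illustration (plain lists, a made-up `episode_of`/`episode_end` layout, a hypothetical `relabel_prob`, no reward recomputation), not the buffer's actual implementation.

```python
import random
from collections import defaultdict


def relabel_future_per_episode(
    sampled: list[int],
    episode_of: list[int],
    episode_end: dict[int, int],
    achieved_goals: list[int],
    relabel_prob: float,
    rng: random.Random,
) -> dict[int, int]:
    """Return {transition index: new desired goal} for the relabeled transitions."""
    by_episode: dict[int, list[int]] = defaultdict(list)
    for idx in sampled:
        by_episode[episode_of[idx]].append(idx)

    new_goals: dict[int, int] = {}
    for ep, indices in by_episode.items():
        if rng.random() >= relabel_prob:
            continue  # this episode keeps its original goals
        # one future step per episode, so all its samples share the same new goal;
        # starting at max(indices) keeps it in the future of every sampled step
        future_t = rng.randint(max(indices), episode_end[ep])
        for idx in indices:
            new_goals[idx] = achieved_goals[future_t]
    return new_goals


# two episodes stored back to back: steps 0-3 form episode 0, steps 4-6 episode 1
episode_of = [0, 0, 0, 0, 1, 1, 1]
episode_end = {0: 3, 1: 6}
achieved_goals = [10, 11, 12, 13, 20, 21, 22]
new = relabel_future_per_episode([0, 2, 5], episode_of, episode_end, achieved_goals, 1.0, random.Random(0))
print(new)
```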

I also added a few more convenient replay buffers (`HERVectorReplayBuffer`, `HERReplayBufferManager`), a test env (`MyGoalEnv`), a gym wrapper (`TruncatedAsTerminated`), unit tests, and a simple example (examples/offline/fetch_her_ddpg.py).

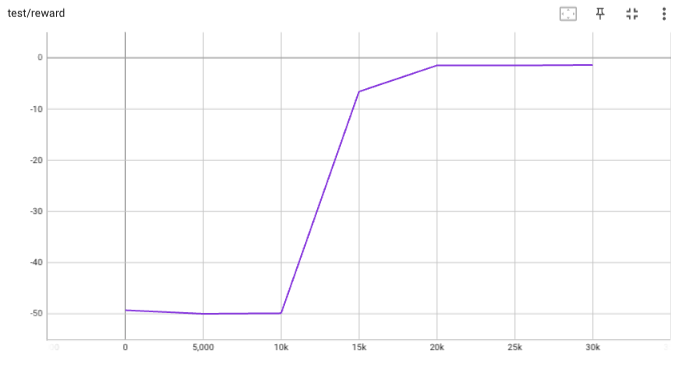

## Verification

I have added unit tests for almost everything I have implemented.

The HER replay buffer was also tested using DDPG on the [`FetchReach-v3` env](https://github.com/Farama-Foundation/Gymnasium-Robotics). I used the default DDPG parameters from the mujoco example and didn't tune anything further to get this good result! (train script: examples/offline/fetch_her_ddpg.py).

- `collector.collect()` now returns 4 extra keys: `rew`/`rew_std`/`len`/`len_std` (previously this was done in the logger)

- `save_fn()` is now called at the beginning of the trainer
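The four extra keys amount to summary statistics over the episodes finished during a `collect()` call. A minimal sketch, assuming the per-episode returns and lengths are available as lists. `summarize_episodes` is a hypothetical helper; it uses the population standard deviation (the same convention as NumPy's default `np.std`), which may not match the collector exactly, and the zero-episode fallback is an illustrative choice.

```python
import statistics


def summarize_episodes(returns: list[float], lengths: list[int]) -> dict[str, float]:
    """Mean and population std of episode returns and lengths."""
    if not returns:
        # hypothetical choice: report zeros when no episode finished
        return {"rew": 0.0, "rew_std": 0.0, "len": 0.0, "len_std": 0.0}
    return {
        "rew": statistics.fmean(returns),
        "rew_std": statistics.pstdev(returns),
        "len": statistics.fmean(lengths),
        "len_std": statistics.pstdev(lengths),
    }


print(summarize_episodes([10.0, 20.0], [100, 200]))
```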